How to see properly: Adversarial defense by data inspection

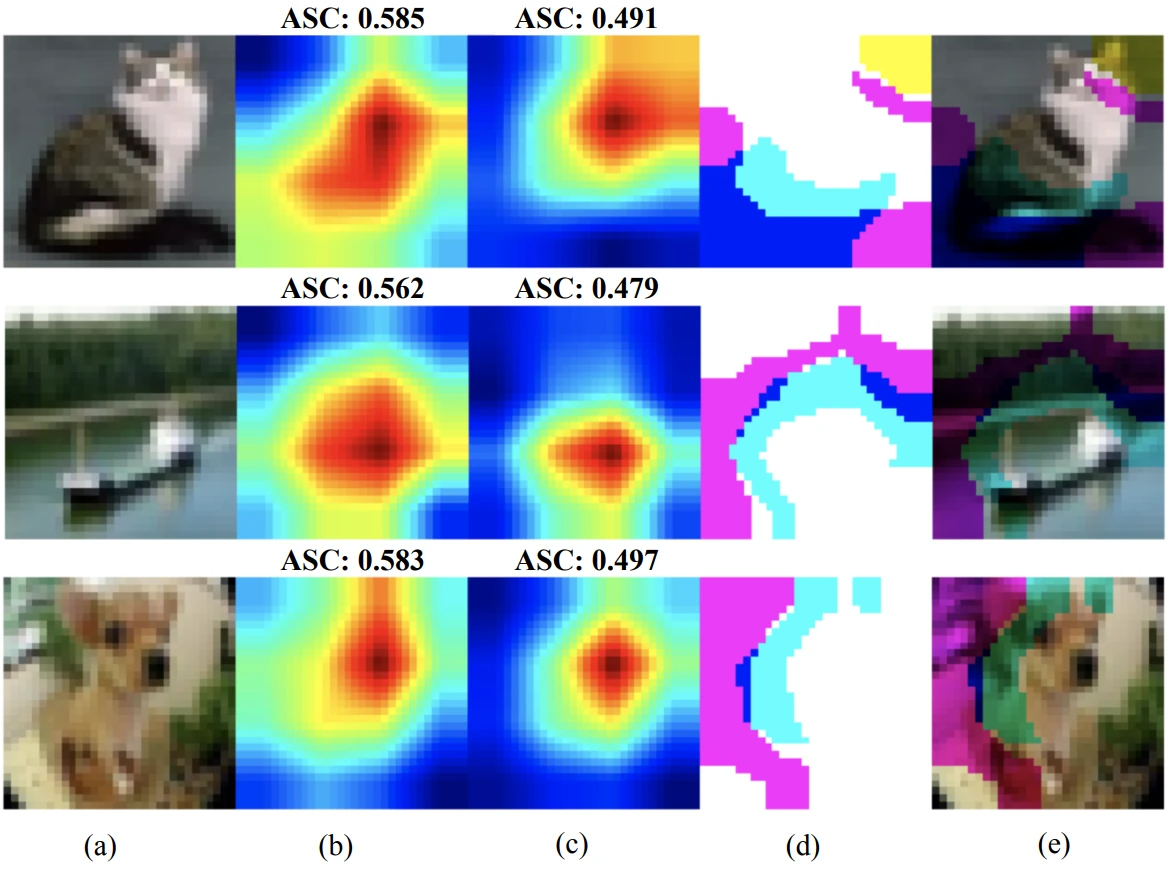

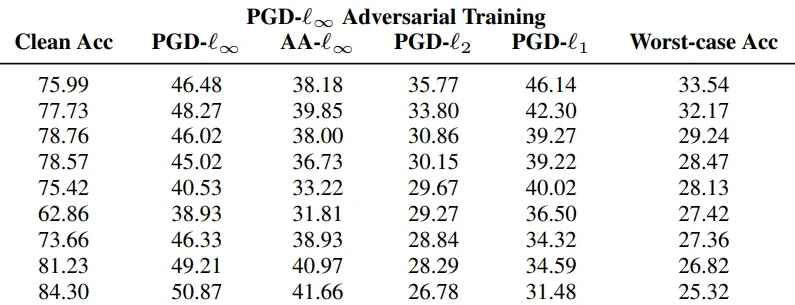

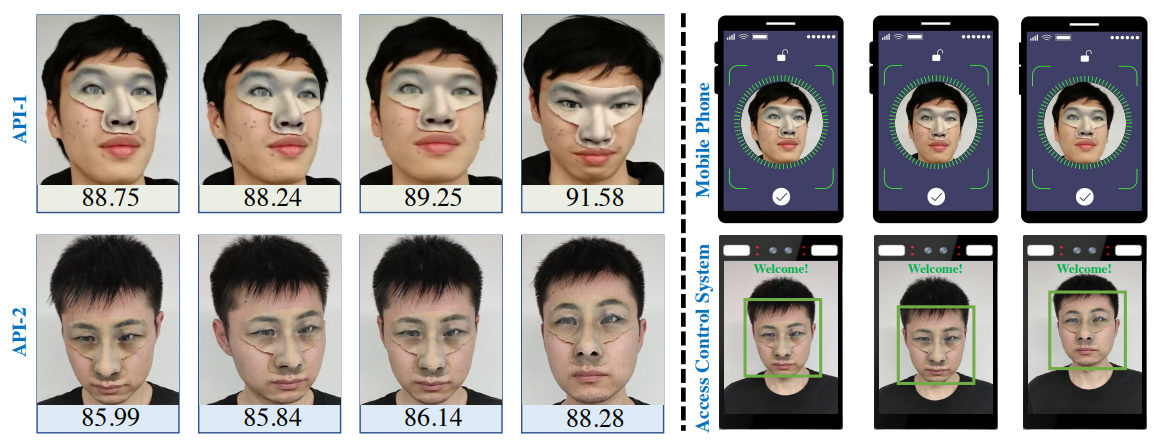

Data inspection is a promising adversarial defense technique. Inspecting the input data properly can reveal adversarial attacks and even remove their effect. This post summarizes data inspection work from CVPR ’23.