How to intuitively understand adversarial attacks on AI models

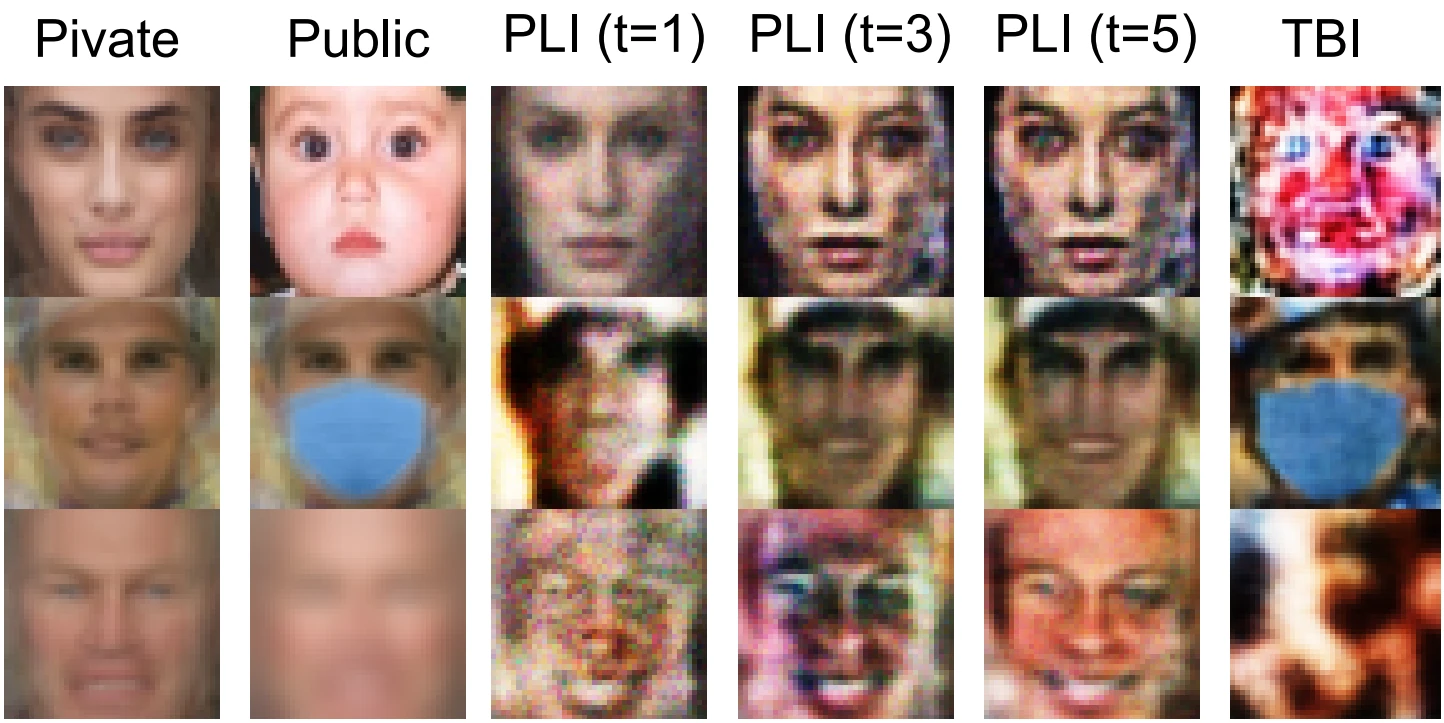

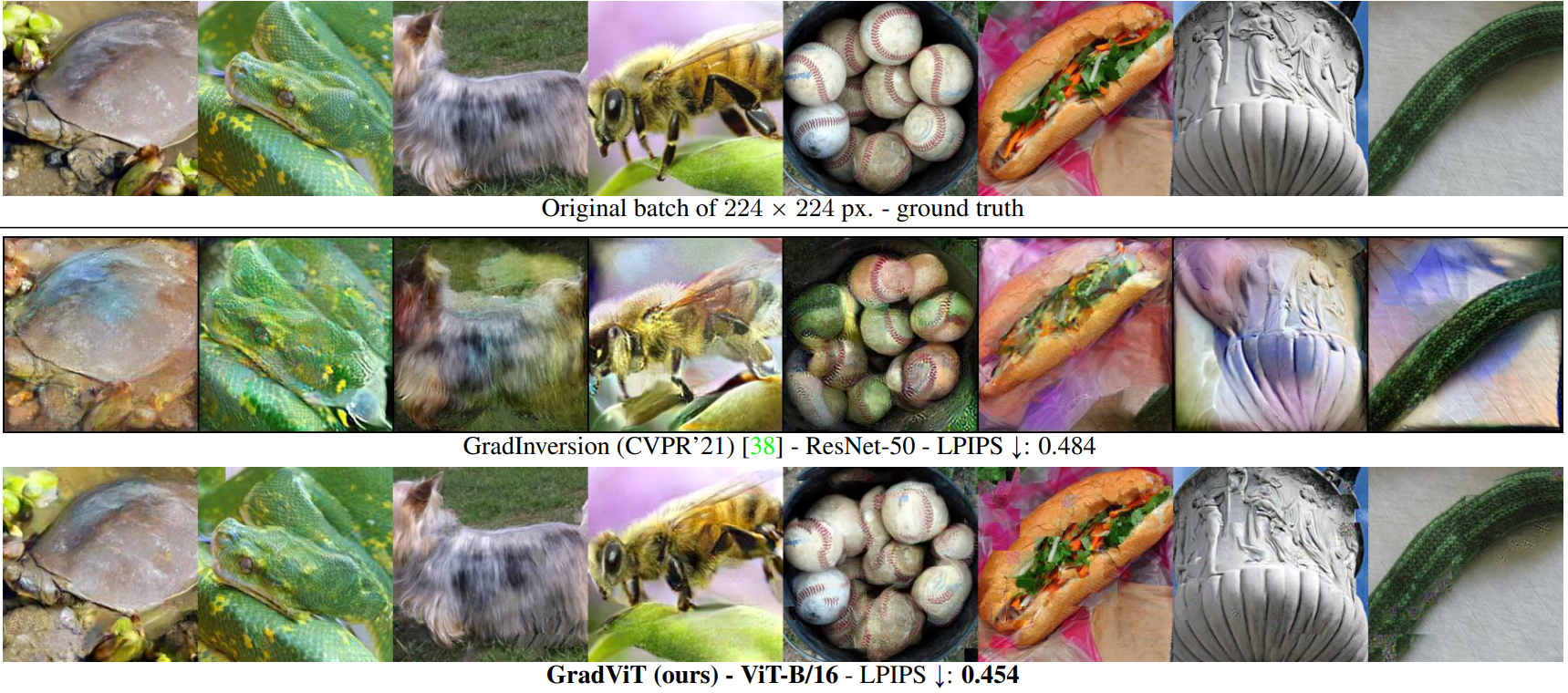

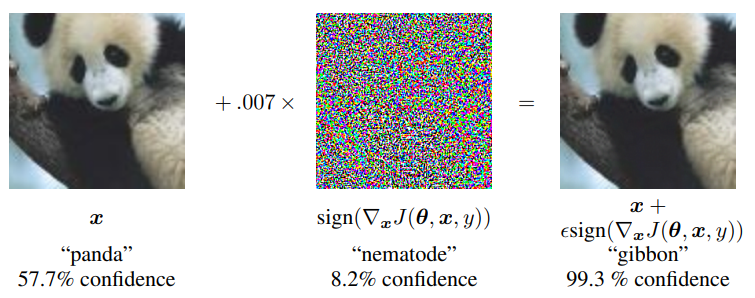

Adversarial attacks on AI models are a fascinating topic. They are the core of AI security research and practice, as seen for example at CVPR ’22. By sprinkling some nigh-invisible magic dust over images, we can make anything look like anything else in the eyes of an AI model. A panda classified as a gibbon, as seen in the famous example in Fig. 1? Sure. Al Pacino being mistaken for Meryl Streep? Why not? Your dog being mistaken for a double-decker bus? Even that can happen. But why? How is it possible that an otherwise super-accurate AI model suddenly appears to be completely blind?

Of course, there is math behind adversarial attacks that explains exactly what happens. However, as the summer approaches, I thought a lighter read would be more appreciated. This post is my attempt at conveying intuitive understanding of adversarial attacks with a real-world example.

Generally, adversarial attacks can be likened to manipulative behaviour in real life: ensuring a certain favourable outcome for ourselves even if we don’t deserve it based on existing facts and rules. A nice example is provided in the music video for Murder on the Dancefloor by Sophie Ellis-Bextor, in which the protagonist, played by Sophie, wins a dancing competition, and not really because she was the best dancer.

Yearly evaluations at Y Corp

To break adversarial attacks down in more detail, let’s use the example of yearly employee evaluations at a fictional company, Y Corp. I know, I know, a dance competition as seen in the video is much more enticing, but that analogy only goes so far. Anyway, back to Y Corp: for the yearly evaluation, every employee writes a report summarizing last year’s work. The report is then evaluated by Malvina, a manager, who recommends a pass/fail mark to the board of the company. For the sake of simplicity, let’s consider a binary case: a pass mark means the employee keeps working for Y Corp, a fail mark means firing the employee. The board of the company then takes the final decision. They have the real power and legal responsibility for the decision, but since there are so many employees and so many other tasks to be done, they mostly confirm whatever Malvina recommends.

So far, the presented story maps to the AI security adversarial attack case as follows:

- The task is yearly employee evaluation: the data inputs are employee work reports, the task output is the pass/fail mark which should fairly judge the employee’s performance.

- Malvina plays the role of the AI model. She is the one that looks at the reports and passes judgment based on the seen data.

- Y Corp’s board plays the role of the human stakeholder of the AI project. They delegate the work, because it would be laborious to do it directly, but they are ultimately responsible for the impact of Malvina’s decisions.

Mr. X goes adversarial

Now, let’s meet Mr. X, the adversarial attacker in our story. Mr. X is one of Y Corp’s employees. His attitude towards work is completely pragmatic: he wants to earn as much money as possible whilst doing as little work as possible. He’s comfortable at Y Corp and he does not want to get fired, as finding a new job is a hassle. Mr. X can be very crafty when it comes to avoiding work. He knows how to look busy, how to steer meetings so that little work falls on his shoulders, and whom to ask for help when the work can’t be avoided. All that matters to him is his objective of maximizing pay whilst minimizing work, so all means towards this objective are fair game.

Normally, Mr. X is cruising along just fine, but this year, he’s in trouble. His results are really bad. If he just reported them, he would surely receive a fail mark. Therefore, he must manipulate the report such that it passes Malvina’s judgment. What can he do?

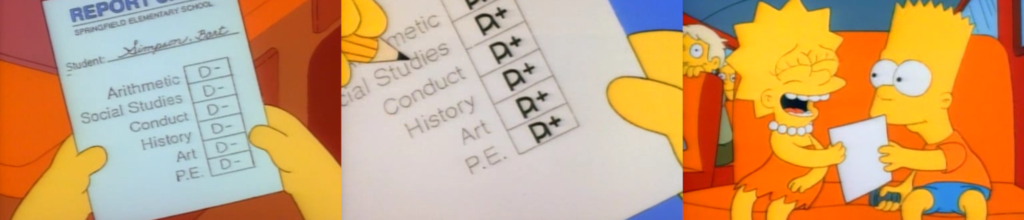

He has to lie, but keep it believable and based on real facts. The work was not stellar, and writing a false report going for the perfect grade would be as silly as Bart Simpson changing all his D- grades to A+ (Fig. 3). The bigger the lies, the higher the chance Malvina or even Y Corp’s board will notice something’s amiss. No, stealth is an adversarial attacker’s best friend. The report shall make the work seem not great, but passable. It should inflate his insignificant contributions so that they appear impressive. He was at 95% of the weekly meetings. Wow, right? Mr. X has to admit that some goals haven’t been achieved to keep it believable, and muddy the waters with respect to the other failures. Some goals apparently haven’t been realistic from the get-go. Some failures are obviously not his fault, but the fault of others. If he doesn’t want to get his hands dirty with blaming others, he can chalk the failure up to “miscommunication”. Some goals have “pivoted”, some had “partial success”…

The possibilities of manipulating the language of the report are endless. Masters of language can even get by without explicit lies. All one needs is to use words to the fullest extent of their meaning, as illustrated by the following favourite quote of mine:

“Elves are wonderful. They provoke wonder.

Elves are marvellous. They cause marvels.

Elves are fantastic. They create fantasies.

Elves are glamorous. They project glamour.

Elves are enchanting. They weave enchantment.

Elves are terrific. They beget terror.

The thing about words is that meanings can twist just like a snake, and if you want to find snakes look for them behind words that have changed their meaning.

No one ever said elves are nice.

Elves are bad.”

Terry Pratchett: Lords and Ladies

If Mr. X knows Malvina very well, he can manipulate her better. He can make sure his gift for her birthday is extra thoughtful. At coffee breaks, he can mention how he loves the same music and roots for the same sports team as her. When writing the report, he can use his knowledge of how Malvina thinks to exploit her blind spots and ensure that the parts she focuses on are iron-clad. This scenario corresponds to a white-box attack: the attacker has good knowledge of how the model works. If their interactions are strictly business-related and he does not know her, then he executes a black-box attack: he has to stick to general, non-personalized manipulations of the report that would work on a general manager-type. From this example it is clear that white-box attacks are easier than black-box attacks, because we simply have more information to go on. This is also true in AI security.
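For readers who like to see the idea in code, here is a minimal sketch of the white-box versus black-box difference. Everything in it is an assumption made for illustration: Malvina is reduced to a hypothetical linear scorer with made-up weights, a report is a list of four numeric features, and `eps` is the largest tweak Mr. X dares to make to any single feature. The white-box attacker nudges each feature in the direction Malvina's weights reward (the sign-of-gradient idea behind FGSM-style attacks), while the black-box attacker here simply inflates everything by the same amount. Real black-box attacks are far more sophisticated, for example by querying the model or by transferring attacks from a substitute model.

```python
# Hypothetical linear "manager": a report passes if its weighted score is positive.
# The weights and bias are invented for this example.
WEIGHTS = [2.0, -1.0, 0.5, 1.5]
BIAS = -1.0


def score(report, weights=WEIGHTS, bias=BIAS):
    """Weighted sum of the report's features plus a bias."""
    return sum(w * x for w, x in zip(weights, report)) + bias


def decides_pass(report):
    """Malvina's verdict: True means pass, False means fail."""
    return score(report) > 0


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def white_box_attack(report, weights, eps):
    """Shift each feature by eps in the direction that raises the score.

    For a linear scorer, the gradient of the score with respect to a
    feature is simply that feature's weight, so its sign is all we need.
    """
    return [x + eps * sign(w) for x, w in zip(report, weights)]


def black_box_attack(report, eps):
    """Naive attack without knowledge of the weights: inflate every feature."""
    return [x + eps for x in report]


honest = [0.2, 0.5, 0.1, 0.3]  # Mr. X's honest (bad) report
eps = 0.15                     # the same small budget for both attacks

print(decides_pass(honest))                                # False
print(decides_pass(white_box_attack(honest, WEIGHTS, eps)))  # True
print(decides_pass(black_box_attack(honest, eps)))           # False
```

With the same budget, the attack that knows the weights flips the verdict, while the uninformed one wastes part of its budget inflating a feature that Malvina actually penalizes.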

Mr. X carefully considers his options, writes a forged report, and submits it. At a glance, it looks perfectly ordinary, like the thousands of other reports Y Corp received from its employees. It paints a picture of maybe not the highest performer, but a passable employee. Malvina reads it and… gives Mr. X a pass! The board signs Malvina’s decision, and Mr. X’s adversarial attack was successful: the true judgment of his report should’ve been a fail, but he received a pass.

Adversarial defense

Mr. X celebrates, but for Y Corp, this outcome is bad. They will be paying Mr. X’s salary for another year without getting adequate work in return. What can they do to prevent subpar employees from passing employee evaluation? This is exactly the question for adversarial defense. In our case, Y Corp can for example do the following:

- Adversarial training: As part of manager training, Y Corp can show Malvina examples of manipulated evaluation reports to make her decisions more robust to manipulations. In AI parlance, “give the model correctly labeled adversarial examples as training data”.

- A committee of managers assigns the marks, instead of just Malvina. Even better: at least some members of the committee are randomly selected. This makes the attack a black-box attack (it is not enough to know Malvina), and increases the pressure on attack transferability: one report (= data input) has to fool multiple managers (= models) at once.

- They can employ an adversarial attack detection expert. The expert’s task would be to raise the alarm if a given report seems like it was manipulated. This report is then checked extra carefully.
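The committee idea from the list above can be sketched in a few lines. Again, all numbers are assumptions for illustration only: three hypothetical linear managers with invented weights, a majority vote, and a report that Mr. X has tuned specifically against Malvina. The point is merely that a manipulation crafted for one judge does not automatically transfer to the others.

```python
# Three hypothetical managers, each a linear scorer given as (weights, bias).
# All numbers are invented for this example.
MALVINA = ([2.0, -1.0, 0.5, 1.5], -1.0)
MANAGER_B = ([-0.5, 2.0, 1.0, 0.5], -1.5)
MANAGER_C = ([0.5, 1.5, -1.0, 1.0], -1.5)
COMMITTEE = [MALVINA, MANAGER_B, MANAGER_C]


def passes(manager, report):
    """A single manager's verdict: pass if the weighted score is positive."""
    weights, bias = manager
    return sum(w * x for w, x in zip(weights, report)) + bias > 0


def committee_passes(committee, report):
    """Majority vote: pass only if more than half of the managers pass."""
    votes = sum(passes(manager, report) for manager in committee)
    return votes > len(committee) / 2


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def tailor_to(manager, report, eps):
    """White-box manipulation aimed at one manager's known weights."""
    weights, _ = manager
    return [x + eps * sign(w) for x, w in zip(report, weights)]


honest = [0.2, 0.5, 0.1, 0.3]
tailored = tailor_to(MALVINA, honest, eps=0.15)

print(passes(MALVINA, tailored))              # True: Malvina alone is fooled
print(committee_passes(COMMITTEE, tailored))  # False: the committee is not
```

To beat the committee, Mr. X would need a single report that fools at least two of the three managers at once, which is exactly the transferability pressure described above.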

There is no single adversarial defense strategy for all tasks, and it is up to Y Corp’s security team to adopt a solid policy that will protect the company’s interests and then actually uphold it. Will Mr. X succeed at employee evaluation next year? Who knows? Y Corp may have a better policy, but Mr. X will also gain one year of experience. It’s an endless cat-and-mouse chase: there are always going to be juicy targets for the attacker to go after, so there will always be a need for security experts to protect them. In my opinion, that is the challenge and beauty of AI security and cybersecurity in general.
