Adversarial training: a security workout for AI models

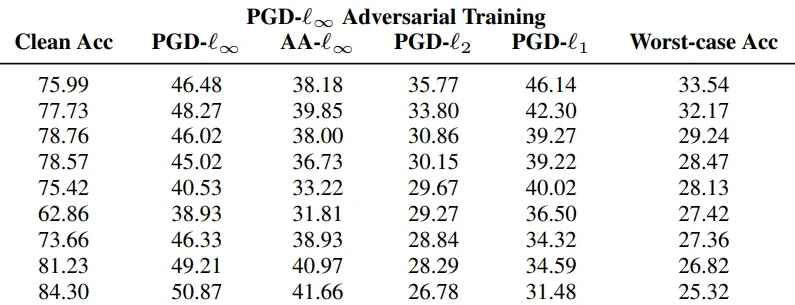

Adversarial training (AT) augments the training data of an AI model with adversarial examples to make the model more robust. How does AT fare against modern attacks? This post covers AT work presented at CVPR ’23.
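To make the idea of changing the training data concrete, here is a minimal sketch of one common AT recipe. It is an illustration under stated assumptions, not the method of any specific CVPR ’23 paper: the attack is the fast gradient sign method (FGSM), the model is a toy logistic regression in pure Python, and the names `fgsm`, `train`, and `eps` are hypothetical choices for this example.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sign(v):
    # Returns -1, 0, or 1.
    return (v > 0) - (v < 0)


def predict(w, b, x):
    # Probability that x belongs to class 1.
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, eps):
    # For the logistic loss, the gradient w.r.t. the input is (p - y) * w.
    # FGSM moves every feature by eps in the direction that increases the loss.
    p = predict(w, b, x)
    return [xi + eps * sign((p - y) * wi) for xi, wi in zip(x, w)]


def train(data, eps=0.0, epochs=200, lr=0.1):
    # Plain SGD. With eps > 0, each clean sample is paired with an FGSM
    # example crafted against the current weights (assumed AT variant).
    w = [0.0] * len(data[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, y in data:
            batch = [(x, y)]
            if eps > 0:
                batch.append((fgsm(w, b, x, y, eps), y))
            for xb, yb in batch:
                g = predict(w, b, xb) - yb  # dLoss/dLogit
                w = [wi - lr * g * xi for wi, xi in zip(w, xb)]
                b -= lr * g
    return w, b


# Toy, linearly separable dataset (made up for illustration).
DATA = [([1.0, 1.0], 1), ([1.5, 0.5], 1), ([-1.0, -1.0], 0), ([-0.5, -1.5], 0)]
```

Calling `train(DATA, eps=0.2)` trains on both clean and perturbed points, while `train(DATA)` is ordinary training. Real AT pipelines typically use stronger multi-step attacks and deep networks, but the loop has the same shape: craft an attack against the current model, then train on the result.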
