Real-world AI security: Physical adversarial attacks research from CVPR ’23

This post is a part of the AI security @ CVPR ’23 series.

Introduction

Physical adversarial attacks are a captivating topic. They modify a real-world object to manipulate a computer vision model deployed “in the wild”. This is where AI security gets real. Without good security, attackers may bypass face recognition models, which may spiral into a serious issue if the given model authenticates people. They can crash autonomous cars. Or they can bypass person detectors, for example those in AI-powered surveillance systems. Physical adversarial attacks are a serious problem, and research on the topic is therefore of utmost importance.

This post covers five papers on physical adversarial attacks presented at CVPR ’23. Other AI security papers at CVPR ’23 discuss the physical-world consequences of the methods and techniques they present, but these five focus entirely on attacks in the physical world.

3D face recognition attacks

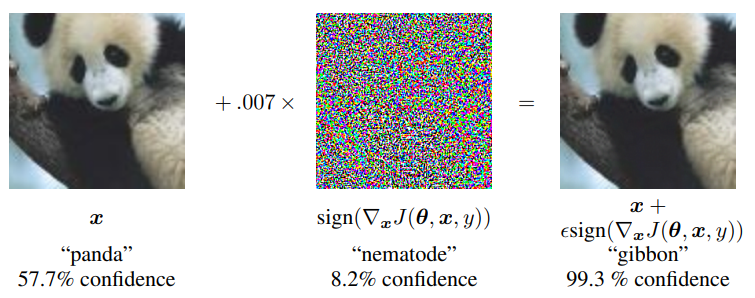

Face recognition models are the classic example of a desirable target for physical adversarial attacks. There are many reasons one might want to bypass face verification and authentication, ranging from legitimate (protecting one’s privacy) to clearly malicious (gaining entry to a private area to steal something). The traditional way to attack face recognition models is to use an adversarial patch: sticking a carefully crafted 2D sticker on one’s face to fool the model. Face recognition model designers and vendors are aware of this method. They have ramped up their defenses, and as a result, modern face recognition methods are resistant to 2D patch attacks: rather than relying on a flat image, they perform a 3D scan of the person’s face. To successfully attack modern face recognition models, attackers must therefore attack the 3D face recognition pipeline. And that is exactly the topic of the two works on physical face recognition attacks we have seen at CVPR ’23.

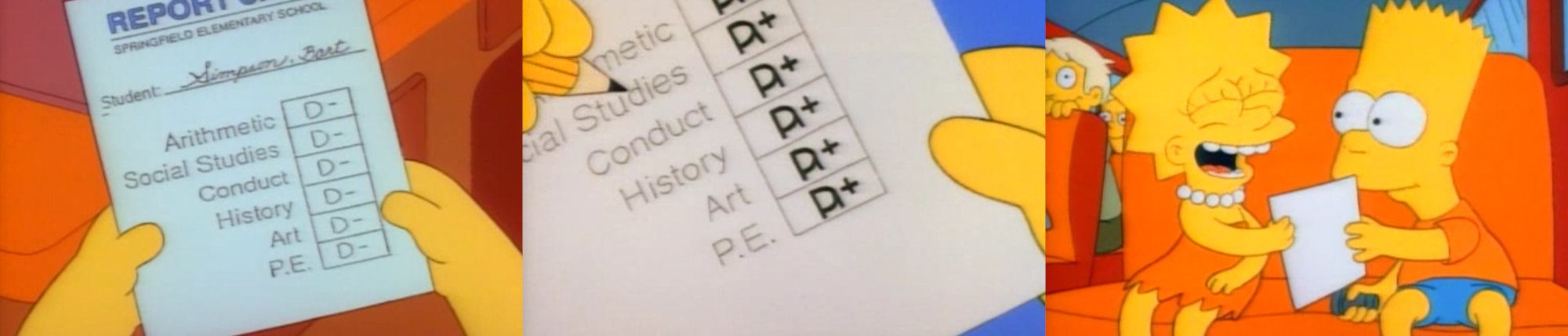

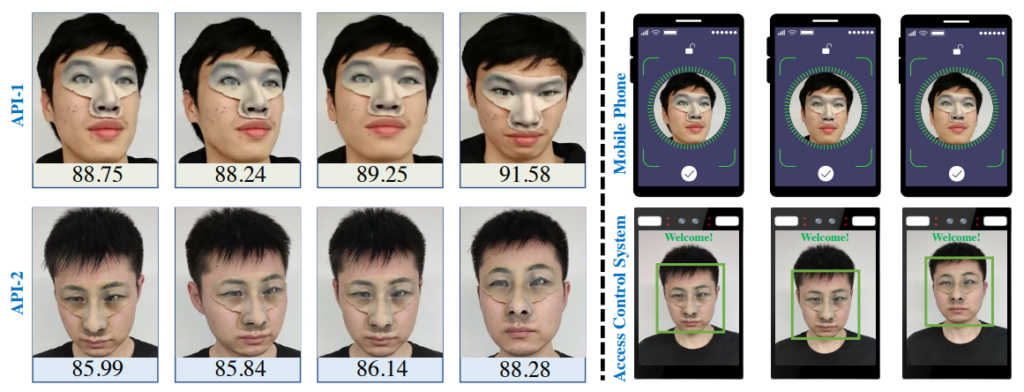

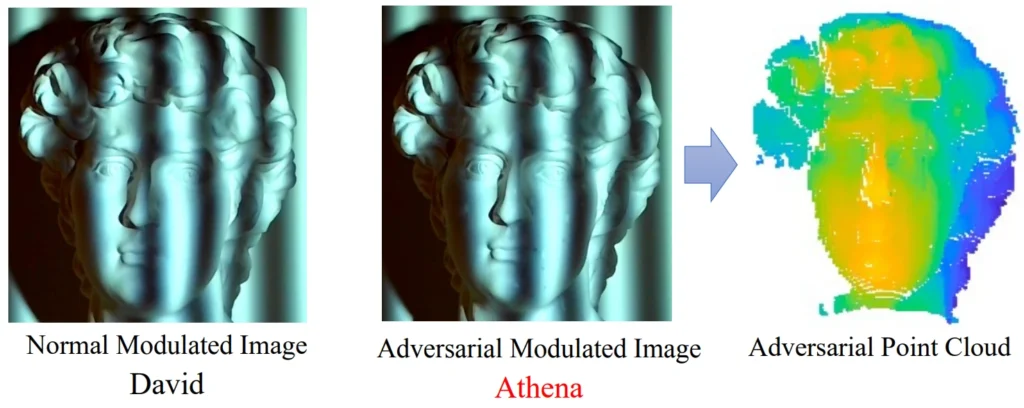

One of the papers is the highlight paper by Yang et al., which I cover in detail in my CVPR ’23 highlights post. Yang et al. create adversarial 3D meshes attached to one’s face (see Fig. 1) that successfully fool a wide variety of commonly used state-of-the-art face recognition models. They achieve impressive results, and conceptually their method makes perfect sense: modify the 3D structure of the face by adding the mesh. How else would you modify the 3D structure of a face, right? Well, there is a way. Yanjie Li et al. have presented their work on adversarial illumination. By optimally lighting parts of the face and concealing others in shadow, they manipulate the 3D point cloud of the presented face. This in turn manipulates the overall output of the model. The concept is illustrated in Fig. 2, and an example of a real-world setup is given in Fig. 3. I like the idea of using light and shadow in physical adversarial attacks: a shadow attack can be switched on and off without a trace, and the manufacturing cost is low. In this particular case, I can imagine that a more portable setup than the one depicted in Fig. 3 would be more practical, but I still like the idea a lot.

Evaluating stealth

Evaluation is a core component of any proper scientific and engineering work. In AI security, the king of evaluation metrics that accompanies virtually every paper is the attack success rate (ASR): the percentage of attacks launched against the model that succeed. Attacks seek to maximize this number, while defense methods aim to minimize it. ASR is a great evaluation metric: it’s clear what it means, it’s undeniably a measure of success, and it’s easy to compare methods based on ASR. Jackpot. Except, it may not cover 100% of the desirable characteristics of an attack. The paper by S. Li et al. argues that most existing successful physical attacks are not stealthy at all. Adversarial patches, textures, and camouflages are usually conspicuous and recognizable. The attacker runs a serious risk of being noticed and possibly stopped before they reach the target. This is clearly an attack failure, but it can never be represented in ASR, as ASR only counts actual model encounters with adversarially manipulated inputs.
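To make the ASR definition concrete, here is a minimal Python sketch. The function name and the boolean outcome list are my own illustrative choices, not taken from any of the papers; each entry simply records whether one attack attempt fooled the model.

```python
from typing import Sequence


def attack_success_rate(successes: Sequence[bool]) -> float:
    """Return the percentage of attack attempts that fooled the model.

    `successes` is a hypothetical per-attempt record: True if the
    adversarial input fooled the model, False otherwise.
    """
    if not successes:
        raise ValueError("need at least one attack attempt")
    return 100.0 * sum(successes) / len(successes)


# Four attempts, three of which fooled the model.
outcomes = [True, True, False, True]
print(attack_success_rate(outcomes))  # 75.0
```

Note that an attacker who gets spotted and stopped before reaching the camera never appears in `outcomes` at all, which is exactly the blind spot discussed above.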

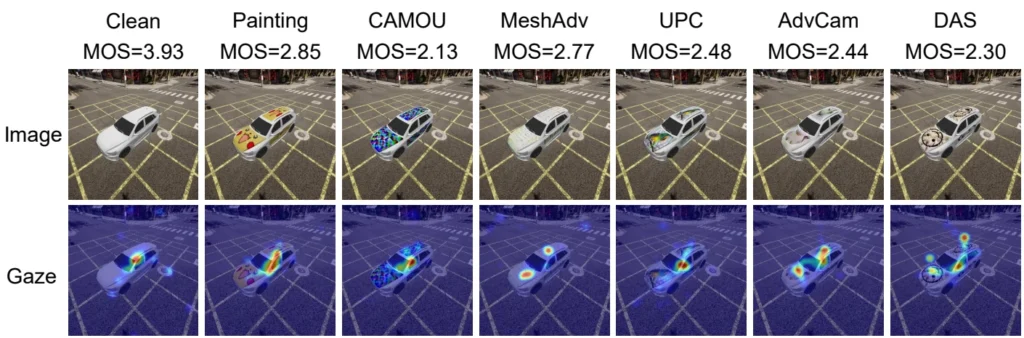

S. Li et al. propose a method to automatically evaluate adversarial attack “naturalness” (i.e., stealthiness), focusing on the domain of autonomous driving. Their first contribution is the Physical Attack Naturalness (PAN) dataset: the authors generated 2688 car images with and without adversarial patterns, asked human reviewers to judge the naturalness of the images, and recorded the reviewers’ eye gaze tracking data and naturalness opinion scores. Fig. 4 depicts examples of data in the PAN dataset. The authors propose to use PAN as training data for a dual prior alignment (DPA) network that emulates a human observer. Once trained, the DPA network automatically assigns “natural/not natural” labels to image inputs and therefore serves as a “stealth check” for attacks. This approach removes the need to have a committee of human observers on hand for every attack under development, which would obviously be very costly and inefficient. I think this is an important first step towards better evaluation of physical adversarial attacks, and I would like to see more work on the topic. In particular, I would welcome generalization to domains beyond autonomous driving and more intuition on how to interpret the results from a designer’s perspective.
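One way to picture how such a “stealth check” could complement the attack success rate is the following Python sketch. To be clear, this is my own illustration and not a metric from the paper: the naturalness scores in [0, 1], the 0.5 threshold, and the function name are all assumptions, and the scores stand in for the output of some hypothetical naturalness model.

```python
from typing import Sequence


def stealthy_success_rate(
    successes: Sequence[bool],
    naturalness: Sequence[float],
    threshold: float = 0.5,  # assumed cut-off, not from the paper
) -> float:
    """Percentage of attempts that fooled the model AND look natural.

    `naturalness` holds one hypothetical score in [0, 1] per attempt;
    a score at or above `threshold` counts as "natural".
    """
    if len(successes) != len(naturalness):
        raise ValueError("need one naturalness score per attempt")
    if not successes:
        raise ValueError("need at least one attack attempt")
    hits = sum(
        1
        for fooled, score in zip(successes, naturalness)
        if fooled and score >= threshold
    )
    return 100.0 * hits / len(successes)


# Three attempts fool the model, but one of them looks conspicuous.
outcomes = [True, True, False, True]
scores = [0.9, 0.2, 0.8, 0.7]
print(stealthy_success_rate(outcomes, scores))  # 50.0
```

In this toy example, three of four attempts fool the model, yet only two of them would also pass the stealth check, so a conspicuous attack is no longer rewarded.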

If looks could kill… detectors

Person detectors are another classic physical adversarial attack target domain, and CVPR ’23 has seen two works on the topic. Hu et al. have presented a new adversarial camouflage, worn on clothes, that evades person detectors. This is the continuation of the work by a similar author collective from CVPR ’22, covered in an earlier blog post of mine. The CVPR ’22 work introduced the adversarial camouflage as a concept; this year’s work focuses on stealthiness: making the camouflage much less flashy so that the wearer blends in with people wearing regular clothes. Fig. 5 displays the difference between previous adversarial patch/pattern approaches and the adversarial camouflage introduced at CVPR ’23. I think the authors did succeed in making the patterns stand out less in the crowd. Onwards to smaller adversarial fashion accessories!

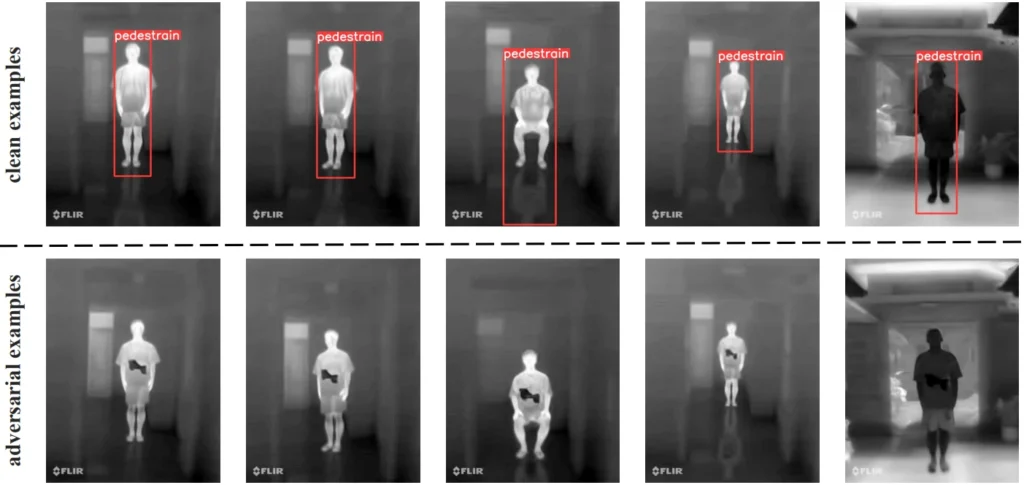

Person detectors are often equipped with IR detectors that help bolster the model’s defenses against physical adversarial attacks. IR detectors ignore the visual color information altogether, focusing on a human’s heat signature, which is difficult to conceal. Being covered in thermal insulation from head to toe is obviously a rather impractical way to defeat IR detectors. X. Wei et al. therefore focus on adversarial IR patches cut out from a thermal insulation material (aerogel) that cover a small part of the attacker’s body, yet still manage to fool a detector. The success of the concept is demonstrated in Fig. 6. I like the minimalistic nature of the attack, and I think combining it with the method of Zhu et al. from CVPR ’22 (covered in the physical adversarial attacks @ CVPR ’22 post) could yield quite interesting results.

List of papers

- Hu et al.: Physically Realizable Natural-Looking Clothing Textures Evade Person Detectors via 3D Modeling

- S. Li et al.: Towards Benchmarking and Assessing Visual Naturalness of Physical World Adversarial Attacks

- Yanjie Li et al.: Physical-World Optical Adversarial Attacks on 3D Face Recognition

- X. Wei et al.: Physically Adversarial Infrared Patches With Learnable Shapes and Locations

- Yang et al.: Towards Effective Adversarial Textured 3D Meshes on Physical Face Recognition
