AI security @ CVPR ’23: Honza’s highlights & conclusion

This post presents “Honza’s highlights”: CVPR ’23 AI security papers that deserve your attention but did not receive official highlight status. It also draws conclusions from CVPR ’23.

Deepfakes & image manipulation are increasingly used for spreading fake news or falsely incriminating people, presenting a security and privacy threat. This post summarizes CVPR ’23 work on the topic.

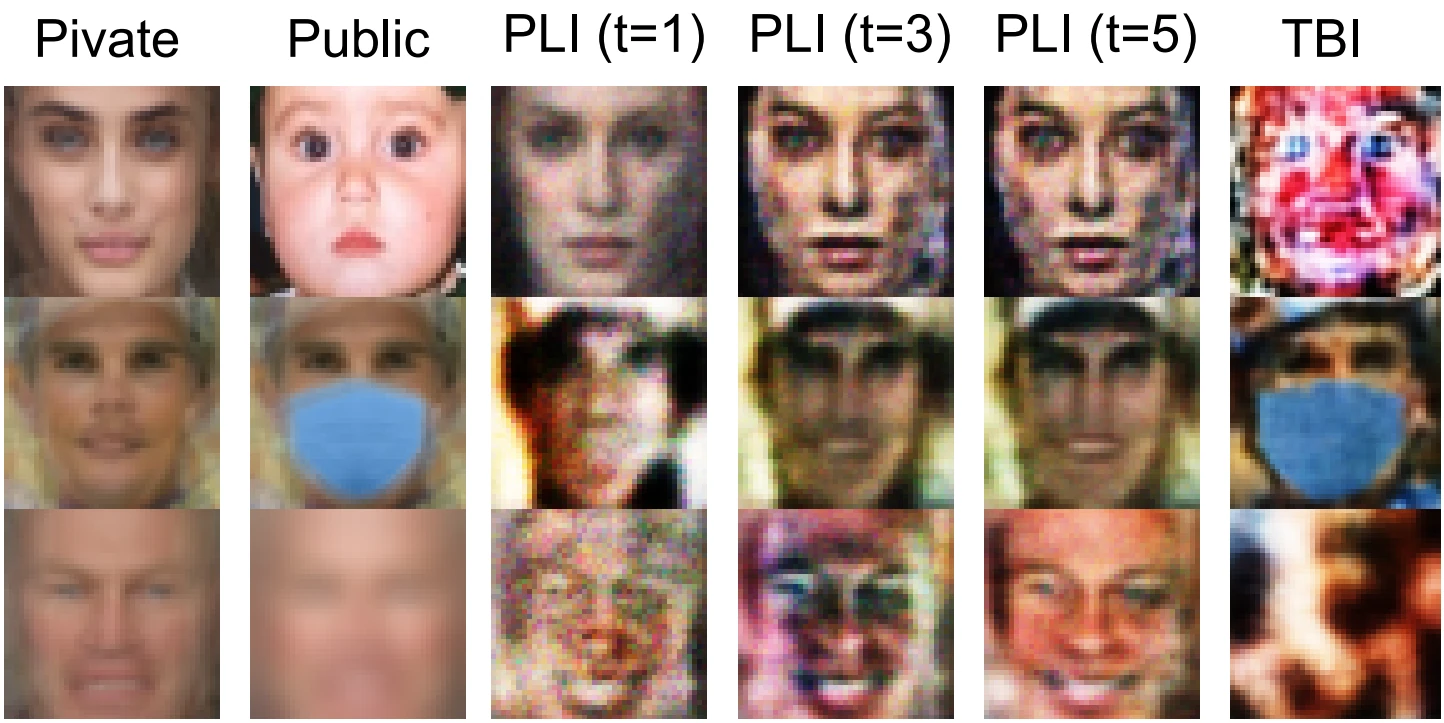

This post summarizes CVPR ’23 work on privacy attacks that threaten to steal an AI model (model stealing) or its training data (model inversion).

Backdoor (or Trojan) attacks poison an AI model during training, essentially giving attackers the keys. This post summarizes CVPR ’23 research on backdoor attacks and defenses.

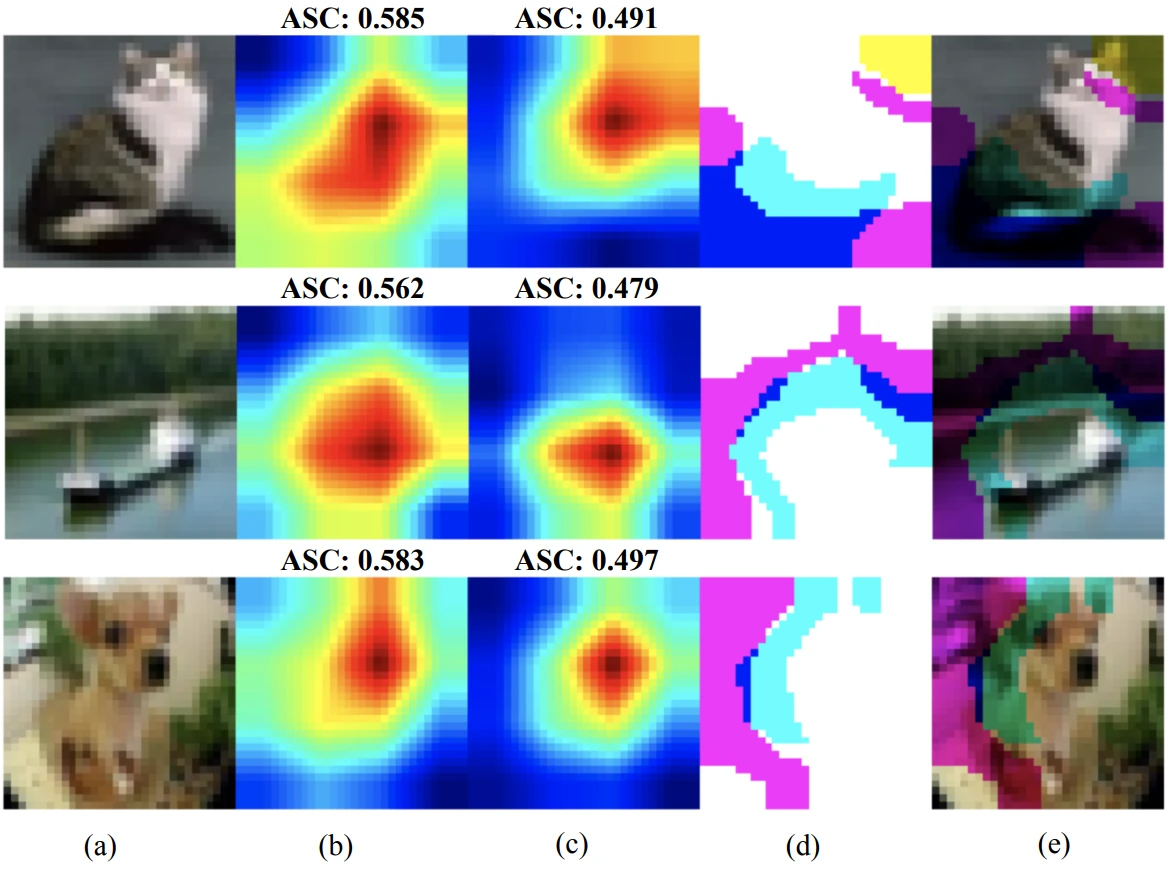

Certifiable security (CS) gives security guarantees to AI models, which is highly desirable for practical AI applications. Learn about CS work at CVPR ’23 in this post.

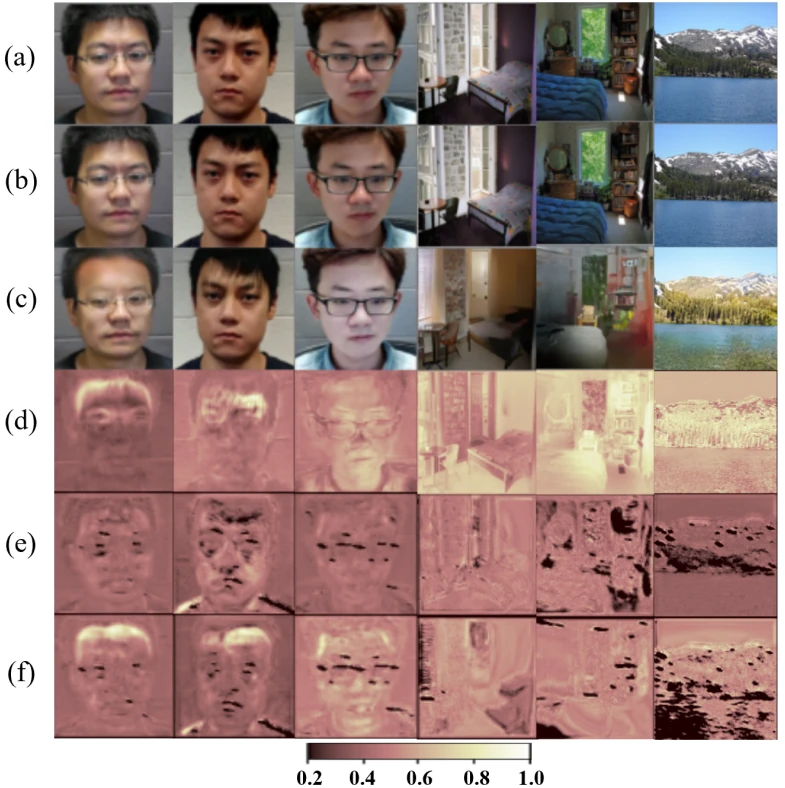

Data inspection is a promising adversarial defense technique. Inspecting the data properly can reveal and even remove adversarial attacks. This post summarizes data inspection work from CVPR ’23.

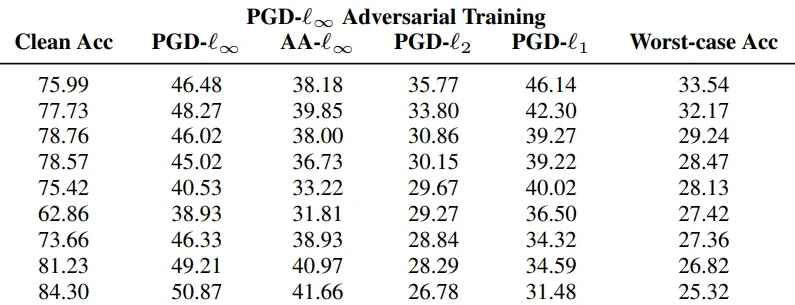

Adversarial training (AT) augments the training data of an AI model with adversarial examples to make it more robust. How does AT fare against modern attacks? This post covers AT work presented at CVPR ’23.

Adversarial defense is a crucial topic: many attacks exist, and their numbers are surging. This post covers CVPR ’23 work on bolstering model architectures.

A mini-tool for comparing how well various computer vision model architectures defend against adversarial attacks, based on the CVPR ’23 work by A. Liu et al.

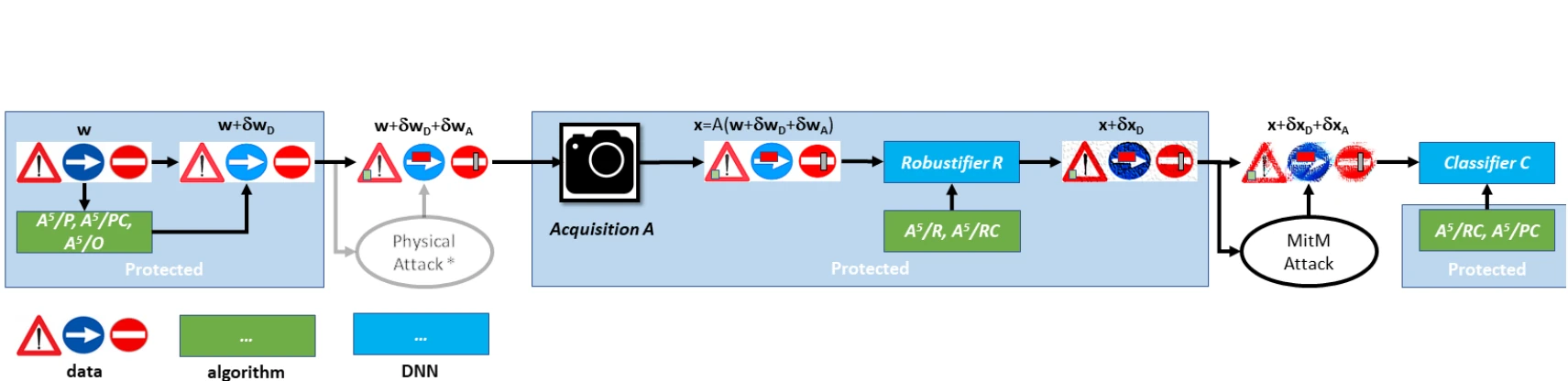

Physical adversarial attacks fool AI models with physical object modifications, harming real-world AI security. This post covers CVPR ’23 work on the topic.