AI security @ CVPR ’23: Honza’s highlights & conclusion

Introduction

With this post, the AI security @ CVPR ’23 series is finally over! In my previous posts, I have covered all 75 AI security papers that appeared at the conference. I am also happy that I kept my promise and completed the full post series before the CVPR ’24 submission deadline. If you are a researcher who aims to submit this year, I hope this has helped you.

In this post, I award my personal Honza’s highlights to 2 papers that IMHO deserve your attention and have not received the official CVPR highlight distinction. After that, I present a brief overall conclusion.

I have selected the personal highlights using the following criteria:

- Novelty — All papers accepted at CVPR cover novel work, but in my opinion, highlight papers should feature an idea that advances the state of the art significantly. It should be an idea that takes AI security in new directions not explored before.

- Readability — The idea is presented in a clear, concise manner, the paper is pleasant to read.

- Code — I am convinced that in general, AI papers should come with code, otherwise they are of limited use.

And if you’re wondering why they are called Honza’s highlights: I am Czech, and in our culture, pretty much every Jan is called Honza by friends, and I am no exception. I think this informal, friendly version of my name matches the informal nature of personal highlights.

Honza’s highlight: Segmentation attack

Paper: Rony et al.: Proximal Splitting Adversarial Attack for Semantic Segmentation

Paper code: https://github.com/jeromerony/alma_prox_segmentation

Covered in: New adversarial attacks on computer vision from CVPR ’23

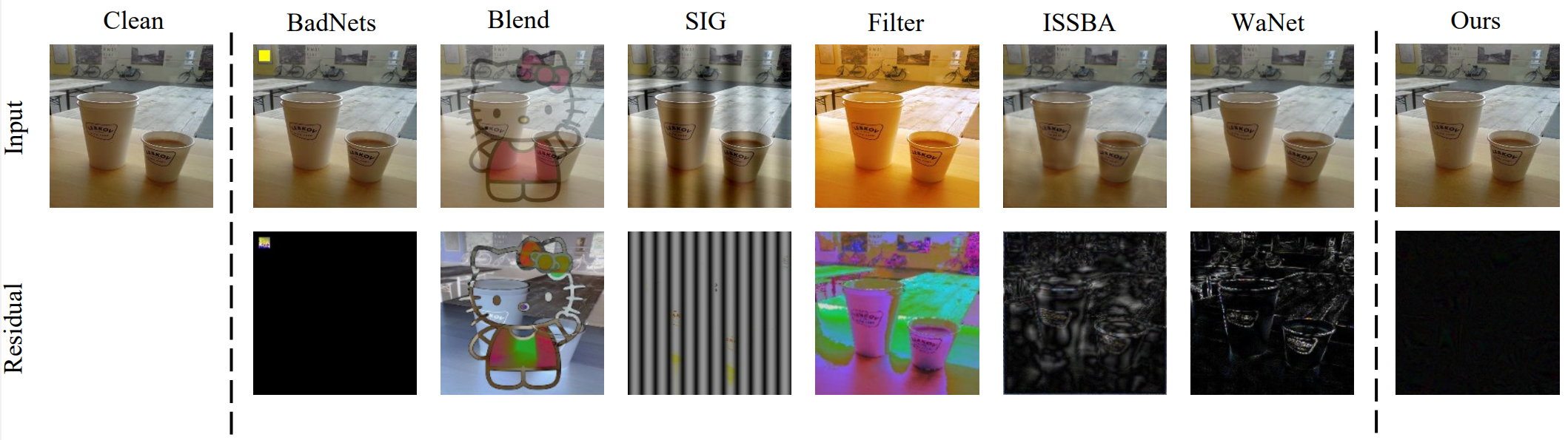

Image segmentation is a core computer vision task, yet attacks on it receive much less research attention than attacks on other core tasks, especially classification. There are two fundamental reasons for that: attacks on semantic segmentation are computationally expensive to run and difficult to evaluate. The paper by Rony et al. impressed me because it overcomes both challenges at the same time. The paper presents ALMA prox, a novel attack that checks all red team boxes: it is computationally efficient, performs well, and is stealthy too (see Fig. 1). To overcome the evaluation challenge, the paper proposes a new metric for semantic segmentation attacks, the attack pixel success rate (APSR). I think Rony et al. have done a lot of heavy lifting to enable AI security work on semantic segmentation, so this is certainly a paper worthy of attention.
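To make the idea of a pixel-level success metric concrete, here is a minimal sketch for an untargeted attack. It assumes the metric is the fraction of labeled pixels whose prediction under attack differs from the ground truth. The function name, the `ignore_label` value of 255, and the toy data are illustrative assumptions, not taken from the paper or its repository, so check those for the exact definition.

```python
def attack_pixel_success_rate(adv_pred, labels, ignore_label=255):
    """Fraction of labeled pixels that the model gets wrong under attack.

    adv_pred and labels are 2D lists of class ids with the same shape.
    Pixels whose ground truth equals ignore_label are skipped (assumption).
    """
    valid = 0
    fooled = 0
    for pred_row, label_row in zip(adv_pred, labels):
        for pred, label in zip(pred_row, label_row):
            if label == ignore_label:
                continue  # unlabeled pixel, not counted
            valid += 1
            if pred != label:
                fooled += 1  # the attack flipped this pixel
    return fooled / valid if valid else 0.0


# Toy 2x3 segmentation map; 255 marks an unlabeled pixel.
labels = [[0, 0, 1],
          [1, 2, 255]]
adv_pred = [[0, 1, 1],
            [2, 2, 0]]

apsr = attack_pixel_success_rate(adv_pred, labels)
print(f"APSR = {apsr:.2f}")  # 2 of 5 labeled pixels flipped -> APSR = 0.40
```

A per-pixel rate like this gives a graded picture of how much of the segmentation map an attack corrupts, rather than a single pass/fail verdict per image.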

Honza’s highlight: Defensive augmentation

Paper: Frosio & Kautz: The Best Defense Is a Good Offense: Adversarial Augmentation Against Adversarial Attacks

Paper code: https://github.com/NVlabs/A5

Covered in: From “maybe” to “absolutely sure”: Certifiable security at CVPR ’23

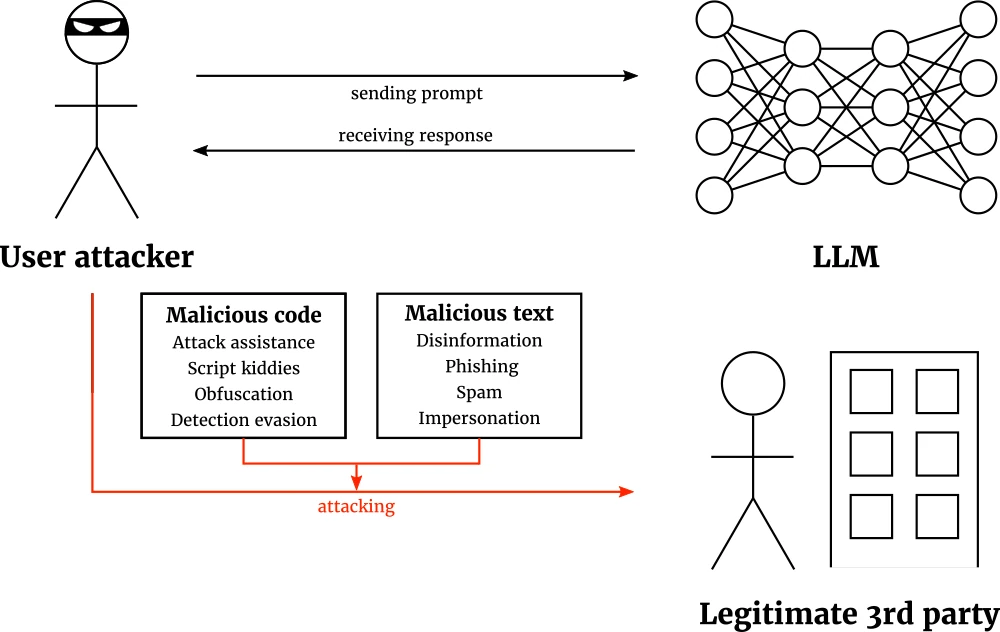

This paper is a great example of work built on a game-changing idea. Frosio & Kautz contribute to certifiable defense (CD). CD is a hard problem: it is far more difficult to guarantee safety than to provide an empirical safety threshold. The reward, however, would be opening the doors for AI in high-stakes disciplines such as aerospace engineering or robot-assisted surgery. The game changer here is grabbing the first-strike advantage from the attacker. Generally, defending a model means reacting to inputs: the attacker chooses when, where, and how to strike. The A5 framework by Frosio & Kautz snatches this advantage by preemptively augmenting the data we want to protect. Fig. 2 depicts the full pipeline, and the main result of the paper is that A5 is the first certifiable defense method for physical adversarial attacks. It is an interesting paper, and I can definitely recommend it.

Conclusions

Overall, I thoroughly enjoyed the AI security papers from CVPR ’23. The vast majority of the papers read well, and I like the newly explored research directions. I find it very interesting that while CVPR ’22 felt like a “red” conference, CVPR ’23 was “purple” (red + blue). Indeed, at CVPR ’22, most works focused on presenting a new attack. At CVPR ’23, we have seen a nice mix of attack and defense papers. In my opinion, this is a sign of the maturity of the AI security field: we have a diverse set of efficient attacks, and now we need robust defenses too.

I am also quite pleased to see that the volume of AI security papers at CVPR keeps rising, as I have shown in the intro post to the series. The area covered by AI security is steadily expanding: the attack possibilities on the one hand and the defensive toolbox on the other are growing larger and more diverse every year. I expect this volume trend to continue. We are still in the early years of AI security. As the adoption of AI increases, the topic will keep gaining traction.

Thanks for reading my AI security @ CVPR ’23 series, good luck if you’re submitting to CVPR ’24, and let’s see what the future holds!
