Cheatsheet of AI security papers from CVPR ’22

All AI security papers from CVPR ’22 with paper link, categorized by attack type.


Image manipulation is an attack that alters images to change their meaning, create false narratives, or forge evidence. This post summarizes AI security work on this topic presented at CVPR ’22.

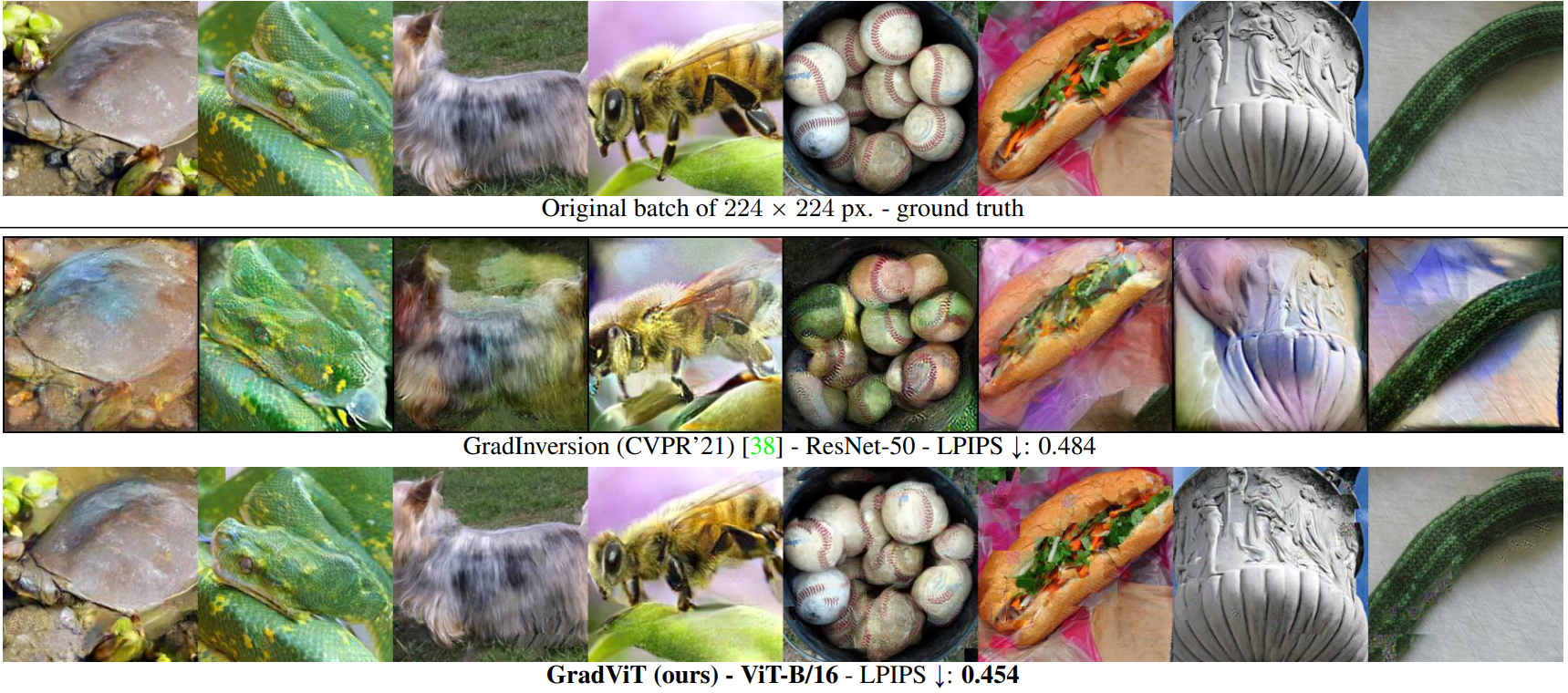

One of the key AI security tasks is protecting data privacy. A model inversion attack can reconstruct training data directly from a trained model, so defending against it is essential. This post covers CVPR ’22 work on model inversion attacks.

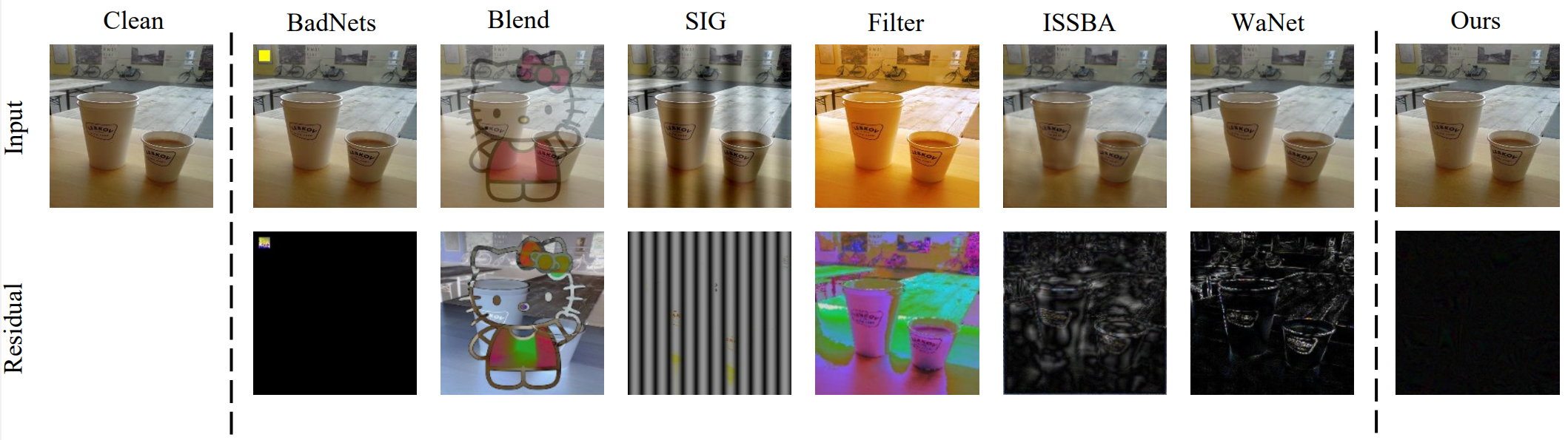

A backdoor or Trojan attack compromises a model so that it produces the output the attacker wants whenever an input is manipulated in a specific way. Backdoor attacks give attackers a permanent, unauthorized security pass, which poses a serious AI security risk. In this post, I cover backdoor attack research presented at CVPR ’22.

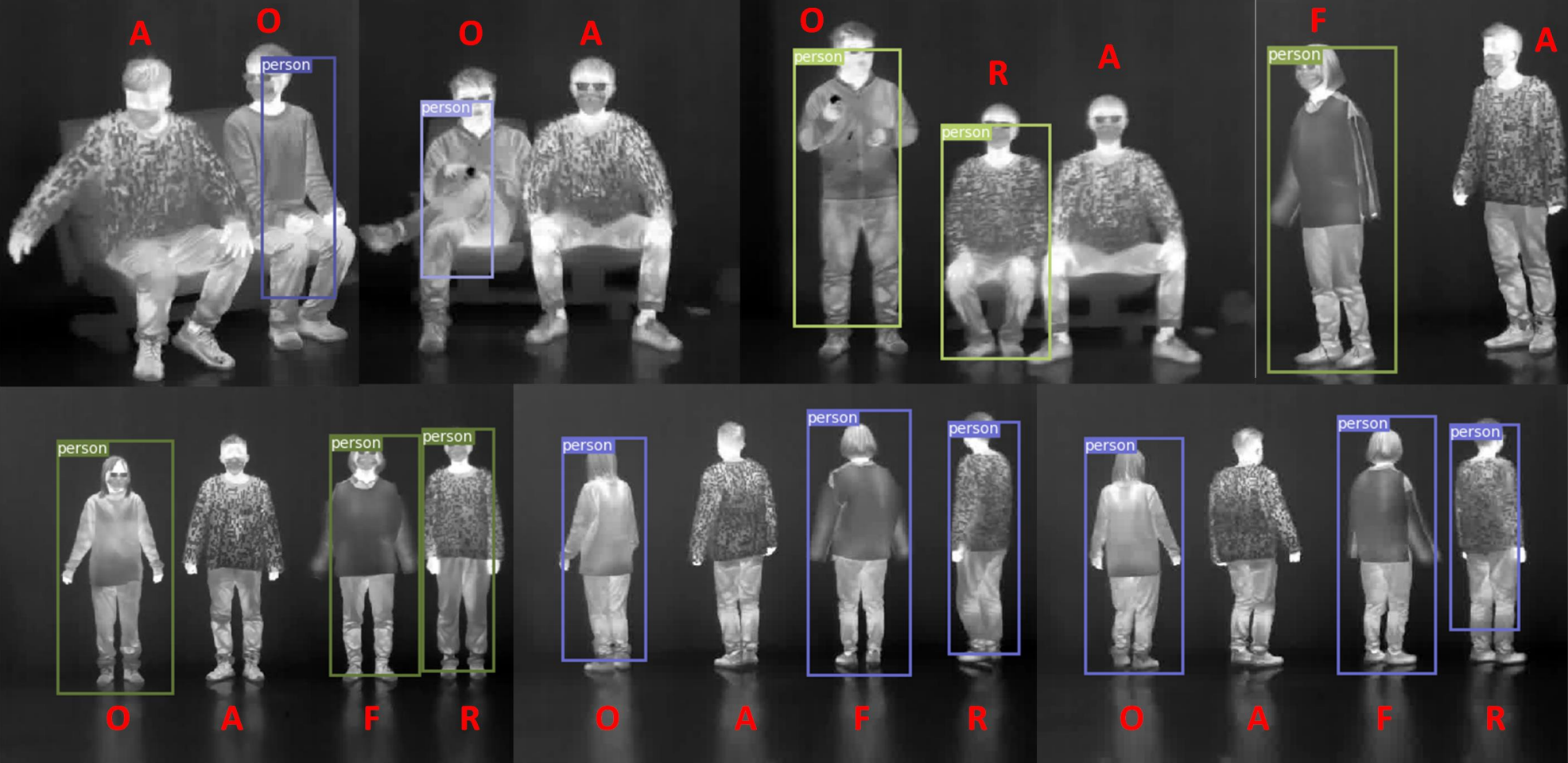

This post is part of the AI security at CVPR ’22 series. Physical adversarial attacks. At a glance, attacks on computer vision models might not sound so serious. Sure, somebody’s visual data processing pipeline might be compromised, and if that’s a criminal matter, let the courts decide. Big deal, it doesn’t really impact me that…

One of the most interesting aspects of cybersecurity is the diversity of attack vectors, or paths through which an attack can succeed. How does AI security fare in this regard? This post focuses on the diversity of attacks on computer vision models.

This post is part of the AI security at CVPR ’22 series. The issue of CV and AI security can feel quite scary. Stronger and more sophisticated attacks keep coming. Defense is a race that must be run but can never be definitively won. We patch the holes in our model, then better attacks…

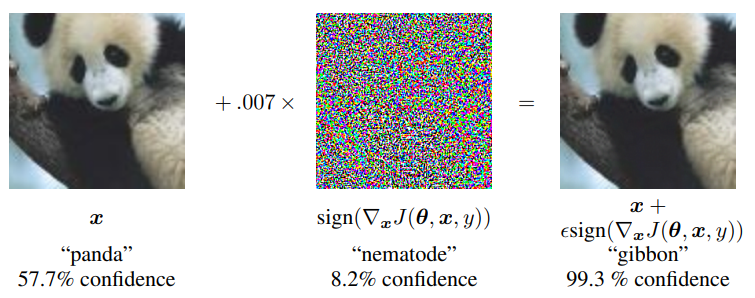

This blog post is part of the AI & security at CVPR ’22 series. Here I cover adversarial attack terminology and research on classic adversarial attacks. Terminology and state of the art. The cornerstone of AI & security research, and indeed the classic CV attack, is the adversarial attack, first presented in the…

Computer vision (CV) is one of the vanguards of AI, and its importance is rapidly growing. For example, CV models perform personal identity verification, assist physicians in diagnosis, and enable self-driving vehicles. The performance of CV models has increased remarkably, dispelling much of the doubt about their effectiveness. With their increased involvement…