AI security @ CVPR ’22: Image manipulation & deepfake detection research

This post is a part of the AI security at CVPR ’22 series.

Image manipulation & deepfake detection

Image manipulation is an attack that alters images to change their meaning, create false narratives, or forge evidence. Image manipulation is centuries old, far predating computer vision. Fig. 1 displays a famous example of photograph manipulation by one of the early adopters of the attack, the Soviet communists. The real photograph on the left depicts, from left to right, the Soviet Foreign Minister Molotov, the Soviet dictator Stalin, and the NKVD (secret police) chief Yezhov who oversaw the brutal Great Purge in the 1930s. Once Yezhov outlived his usefulness, he himself was executed. Subsequently, Soviet propaganda took steps to erase Yezhov from collective memory, resulting in the doctored photo on the right—the once-powerful man who is clearly visible in the image on the left vanishes completely.

Decades ago, doctoring images involved considerable manual labour. Nowadays, with generative AI, it is far easier. Generative adversarial networks (GANs) are able to create convincing deepfakes: images and video footage documenting events that never happened. Deepfakes have their genuine uses, for example in advertising, but they are perhaps better known for their malicious use cases: impersonating real people and delivering misleading or harmful messages on their behalf, or placing them in events they never took part in.

Fig. 2 depicts a case from 2020. Every year, the late Queen Elizabeth II broadcast her Christmas message to the nations of the Commonwealth. In 2020, two speeches were broadcast: the genuine speech on the BBC and a deepfake on Channel 4. The deepfake’s content was tongue-in-cheek, and as the video goes on, it becomes more and more obvious that something is amiss. After all, Channel 4’s intent was not to deceive, but to raise awareness about deepfakes and to make people double-check their sources of information. Nevertheless, the broadcast caused some outrage and also fear: if it’s so simple to convincingly emulate such a familiar person, what and who can we trust? By the way, can you guess which queen in Fig. 2 is the real one? Pause here if you want to guess and read on when you are ready. The answer: the left image is of the real queen, the right is from the deepfake.

The Christmas address case happened almost three years ago, and deepfake technology has improved significantly in the meantime. Nowadays, deepfakes are more convincing and can be generated fully from scratch, whereas in 2020, Channel 4 still had to project a deepfake face onto a real actress in a studio. At CVPR ’22, there were six papers on image manipulation and deepfake detection.

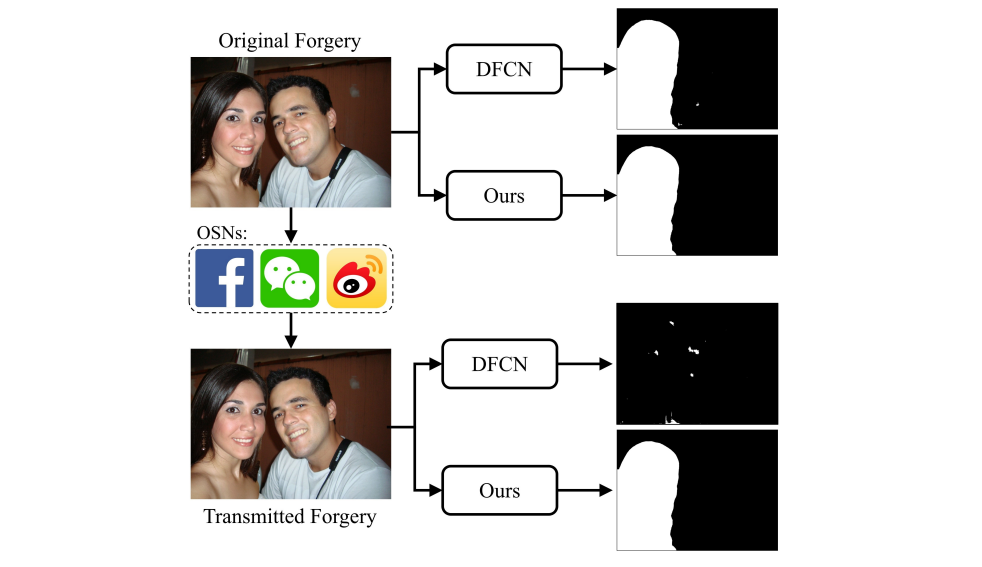

Image manipulation

Much image manipulation happens on online social networks, as they are the ideal place to create false narratives and spread misinformation. Anyone can upload pretty much what they want, provided it passes any existing content filters. With a compelling, emotion-stirring caption and a large enough following, a social network post can spread like wildfire. Detecting image manipulation in social network images is therefore important to counter misinformation. However, Wu et al. note that the social networks themselves unwittingly make the effort more difficult. To make image storage and retrieval more efficient, they use lossy compression, which can conceal a forgery that would be detected in the original image (see Fig. 3). Wu et al. propose a robust method that detects manipulations even in images that have undergone social-network-like lossy compression.

Asnani et al. propose a proactive manipulation detection scheme: instead of just judging whether the input image is fake or not, we safeguard the image’s authenticity from its creation by adding a certain signal to it. The authors call this signal, which is similar in concept to a hash or a signature, a template. An authentication model then verifies the template: if the image has been manipulated, the template is mangled, which is easy to detect. The authors propose robust templates that could eventually be added to an image directly by the camera for increased security.
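To make the embed-then-verify idea concrete, here is a minimal toy sketch in plain Python. It is not the method of Asnani et al.: the fixed checkerboard template, the correlation score, the 0.9 threshold, and all function names are assumptions chosen for illustration, standing in for the learned templates and the authentication model described in the paper. Images are small grayscale grids stored as nested lists.

```python
def make_template(height, width, strength=0.02):
    """Hypothetical fixed template: a low-amplitude checkerboard pattern."""
    return [[strength if (r + c) % 2 == 0 else -strength for c in range(width)]
            for r in range(height)]


def protect(image, template):
    """Add the template to the image at creation time."""
    return [[p + t for p, t in zip(img_row, tpl_row)]
            for img_row, tpl_row in zip(image, template)]


def template_score(image, template):
    """Project the image onto the template.

    Smooth image content contributes almost nothing to this projection,
    so an intact template yields a score near 1.0, and regions where the
    template was overwritten pull the score down.
    """
    num = sum(p * t for img_row, tpl_row in zip(image, template)
              for p, t in zip(img_row, tpl_row))
    den = sum(t * t for tpl_row in template for t in tpl_row)
    return num / den


def looks_authentic(image, template, threshold=0.9):
    """Assumed decision rule: accept only if the template is largely intact."""
    return template_score(image, template) >= threshold


# A smooth 4x4 gradient image, protected at "capture" time.
image = [[0.1 * r + 0.05 * c for c in range(4)] for r in range(4)]
template = make_template(4, 4)
protected = protect(image, template)

# A toy manipulation: overwrite the top-left 2x2 block with flat content.
manipulated = [row[:] for row in protected]
for r in range(2):
    for c in range(2):
        manipulated[r][c] = 0.9
```

In this toy setting, `looks_authentic(protected, template)` accepts the untouched image, while the manipulated copy is rejected because a quarter of the template has been wiped out. A real scheme has to survive benign processing such as compression, which is why the paper learns its templates rather than fixing a simple pattern.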

Deepfake detection

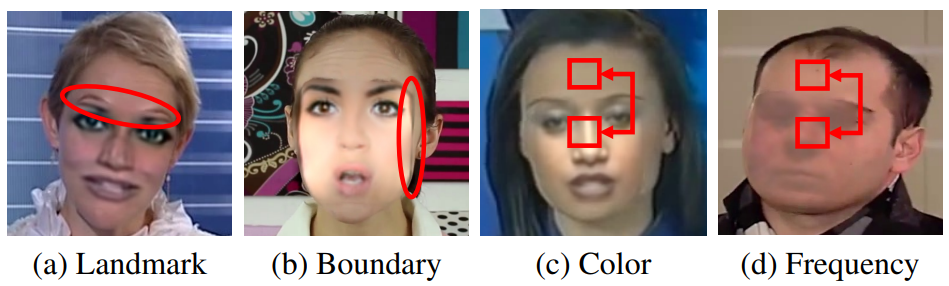

One of the major techniques involved in deepfake face forgery is blending: combining a clean image with a reference face to create an adversarial deepfake example. Since blending is not present in clean images, detecting blending means we can detect deepfakes. Fig. 4 shows an overview of blending visual artifacts typical of deepfake-forged faces. Chen et al. propose a detection model that predicts not only whether the image is a deepfake, but also, if it is, the blending configuration used (blended region, blending method, blend ratio). This richer information can be used to generate more challenging examples, which in turn improve the model’s resilience to deepfakes through adversarial training. To further increase deepfake robustness, Shiohara & Yamasaki propose a simple yet effective idea: use self-blended images, i.e., images of the same person blended together, for adversarial deepfake forgery training. Blending images of the same person generally results in more subtle blending, and in turn in more challenging adversarial training examples.
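Blending itself is simple to write down. The sketch below, in plain Python on nested lists, shows generic mask-based alpha blending with a blend ratio, followed by a toy self-blend in which a slightly altered copy of an image is blended back into itself. The function names, the brightness shift used as the "alteration", and the parameter defaults are illustrative assumptions, not the pipelines of Chen et al. or Shiohara & Yamasaki.

```python
def blend(source, target, mask, ratio=1.0):
    """Alpha-blend `source` into `target` where `mask` is non-zero.

    All three inputs are equally sized 2D lists of floats in [0, 1].
    `mask` holds per-pixel weights; `ratio` scales the whole mask.
    """
    blended = []
    for src_row, tgt_row, mask_row in zip(source, target, mask):
        row = []
        for s, t, m in zip(src_row, tgt_row, mask_row):
            alpha = ratio * m
            row.append(alpha * s + (1.0 - alpha) * t)
        blended.append(row)
    return blended


def self_blend(image, mask, ratio=0.5, brightness_shift=0.1):
    """Toy self-blend: blend a brightened copy of `image` into itself.

    The brightness shift is an assumed stand-in for whatever mild
    transformation produces the second view of the same face.
    """
    altered = [[min(1.0, p + brightness_shift) for p in row] for row in image]
    return blend(altered, image, mask, ratio)


# A 2x2 grayscale "face" and a mask selecting the diagonal pixels.
face = [[0.2, 0.4], [0.6, 0.8]]
mask = [[1.0, 0.0], [0.0, 1.0]]
fake = self_blend(face, mask, ratio=0.5, brightness_shift=0.1)
```

Pixels outside the mask are left untouched, and pixels inside it move only a fraction of the way towards the altered copy. That small, localized difference is the kind of subtle blending trace a detector trained on such examples has to pick up.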

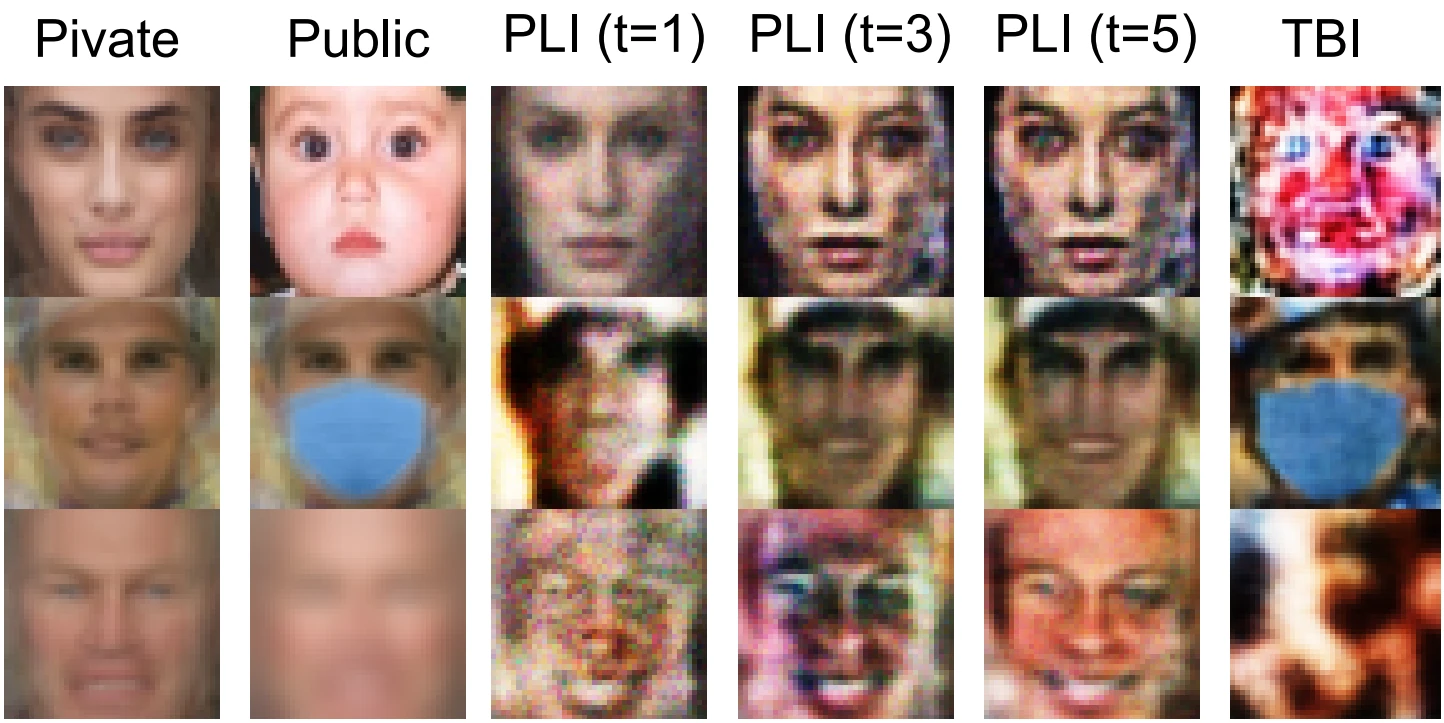

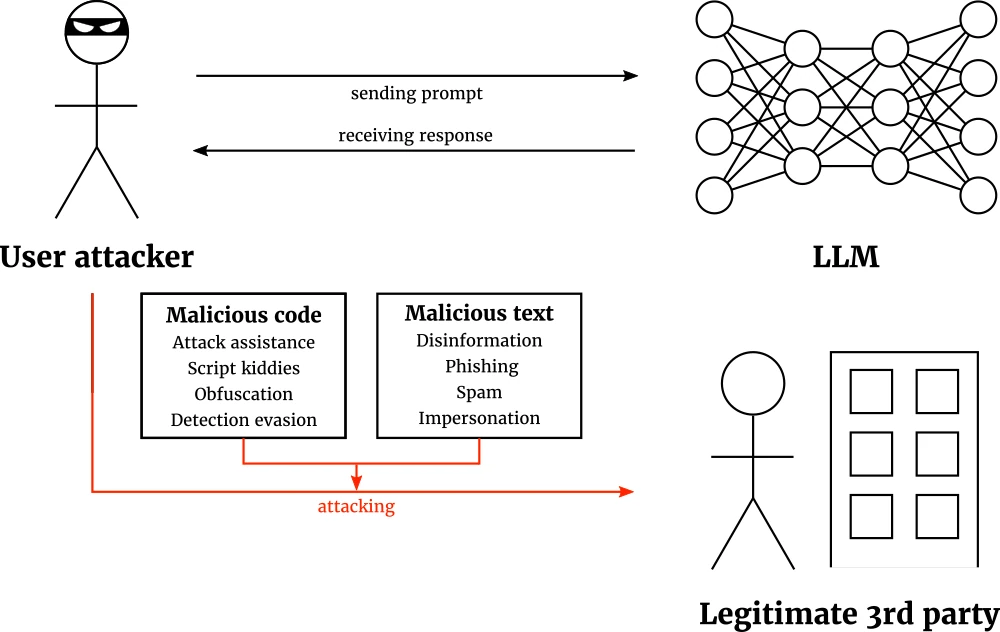

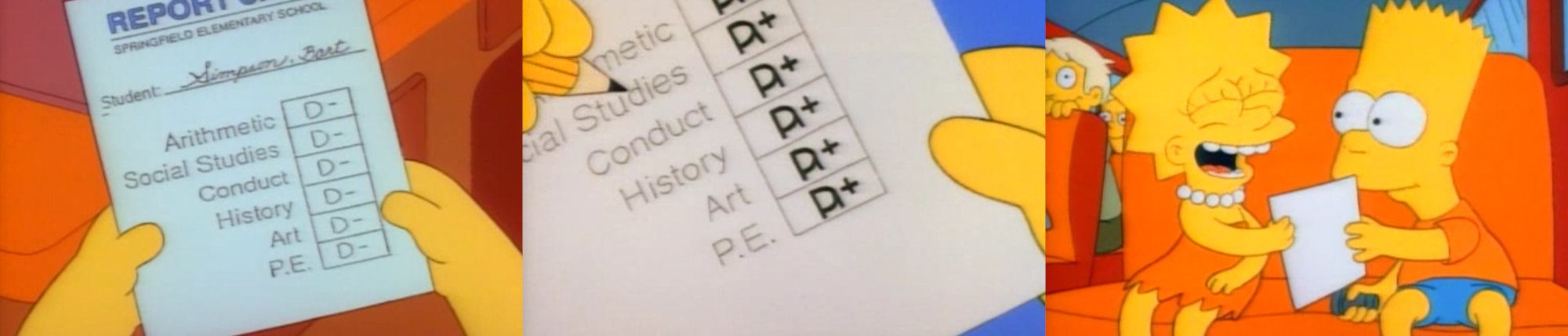

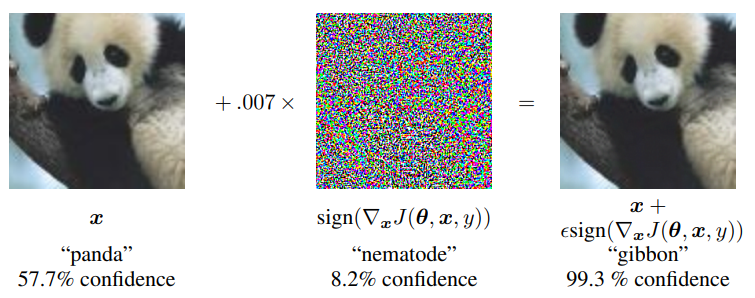

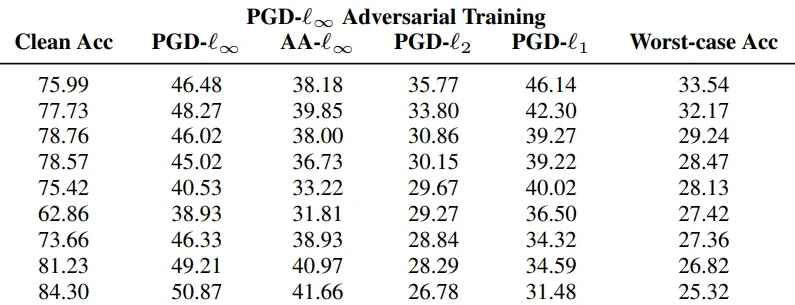

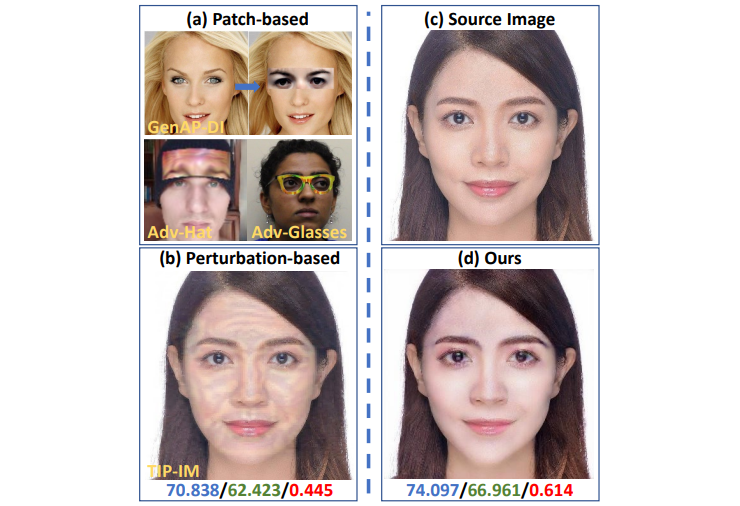

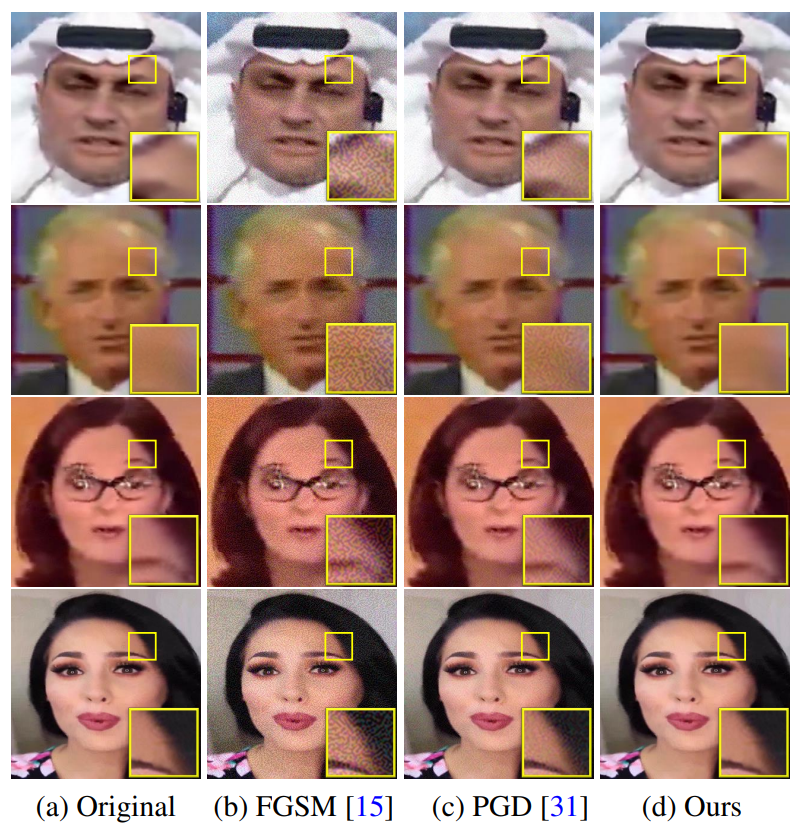

Finally, I’d like to point out that at times, it is desirable to be able to fool face detectors: they’re OK at entry points to private spaces, but not so great if used by a Big-Brother-like entity. Fig. 5 provides an overview of face forgeries, deepfake or not, used to fool face detectors. In addition to adversarial patches & wearables and perturbation-based attacks, one can employ adversarial makeup. Hu et al. propose an adversarial makeup generator that produces more realistic-looking images than the state of the art. Adversarial makeup has the advantage of stealth: if executed well, the forged image is less conspicuous than perturbation-based and patch-based forgeries. Jia et al. utilize frequency-based adversarial attacks to train a forged face generator with superior image quality compared to classic adversarial attack methods such as the fast gradient sign method (FGSM) or projected gradient descent (PGD). Fig. 6 shows the results achieved by this method.
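For readers unfamiliar with the classic baselines mentioned above, FGSM takes a single step of size `eps` in the direction of the sign of the loss gradient with respect to the input. The sketch below applies it to a hypothetical logistic-regression "forgery detector" in plain Python. The model, its weights, and the function names are assumptions for illustration only, and this is the pixel-space baseline, not the frequency-based attack of Jia et al.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_fake_prob(x, w, b):
    """Hypothetical detector: logistic regression over flattened pixels."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(value):
    return (value > 0) - (value < 0)


def fgsm(x, y, w, b, eps):
    """One FGSM step: x_adv = clip(x + eps * sign(dL/dx), 0, 1).

    L is the binary cross-entropy loss of the detector for true label y.
    For logistic regression the input gradient is dL/dx = (p - y) * w.
    """
    p = predict_fake_prob(x, w, b)
    grad = [(p - y) * wi for wi in w]
    return [min(1.0, max(0.0, xi + eps * sign(g))) for xi, g in zip(x, grad)]


# Toy detector and a "fake" input (label 1) with three pixels.
w, b = [2.0, -1.0, 0.5], -0.5
x = [0.8, 0.2, 0.6]
x_adv = fgsm(x, y=1, w=w, b=b, eps=0.1)

p_before = predict_fake_prob(x, w, b)
p_after = predict_fake_prob(x_adv, w, b)
```

Each pixel changes by at most `eps`, yet the detector's confidence that the input is fake drops (`p_after` is lower than `p_before`). PGD repeats such steps several times with a projection back into the allowed perturbation range.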

List of papers

- Asnani et al.: Proactive Image Manipulation Detection

- Chen et al.: Self-supervised Learning of Adversarial Example: Towards Good Generalizations for Deepfake Detection

- Hu et al.: Protecting Facial Privacy: Generating Adversarial Identity Masks via Style-robust Makeup Transfer

- Jia et al.: Exploring Frequency Adversarial Attacks for Face Forgery Detection

- Shiohara & Yamasaki: Detecting Deepfakes with Self-Blended Images

- Wu et al.: Robust Image Forgery Detection Over Online Social Network Shared Images
