Are there guarantees in AI security? Certifiable defense research @ CVPR ’22

This post is a part of the AI security at CVPR ’22 series.

The issue of CV and AI security can feel quite scary. Stronger and more sophisticated attacks keep coming. Defense efforts are a race that must be run, but cannot be definitively won. We patch the holes in our model, then better attacks poke other holes in our defenses… rinse and repeat. Is it possible to escape this vicious circle, if only for a bit? Can computer vision models guarantee their security, or at least a portion of it?

Certifiable defense

Yes! This is exactly what certifiable defense (CD) aims for. For each input a CD model receives, it outputs its normal output as expected by its task (e.g., a class label), and a decision on robustness certainty. The robustness certainty has two possible outcomes: uncertain or robust. If robust, then the model guarantees its decision could not have been manipulated by an attack. The robustness guarantee is supported by a mathematical proof, it is therefore not possible for the model to declare the decision robust whilst it was actually manipulated by an attack. If uncertain, then the model cannot rule out the possibility of an attack. The strength of the robustness guarantee is the main strength of CD models, as it makes them very appealing for domains with high security stakes, such as air traffic control. By rejecting all input that receives the uncertain label, we can be 100% sure we are not letting attackers through.

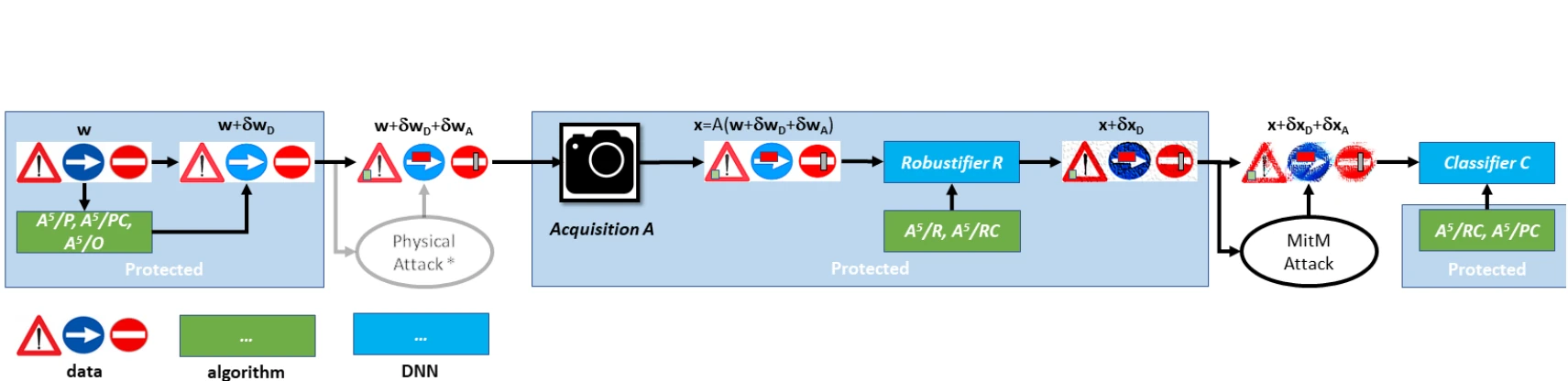

The certifiable defense work at CVPR ’22 focuses on adversarial patch defense. In this type of attack, the attacker crafts a small adversarial patch (example in Fig. 1) and stamps it onto a clean image to trick the model. At a glance, the patches may be more conspicuous than the classic pixel perturbation attack, but they translate well to the real world. For example, a person wearing an adversarial patch sticker may bypass face detection, or stop signs can be stamped such that self-driving cars ignore them.

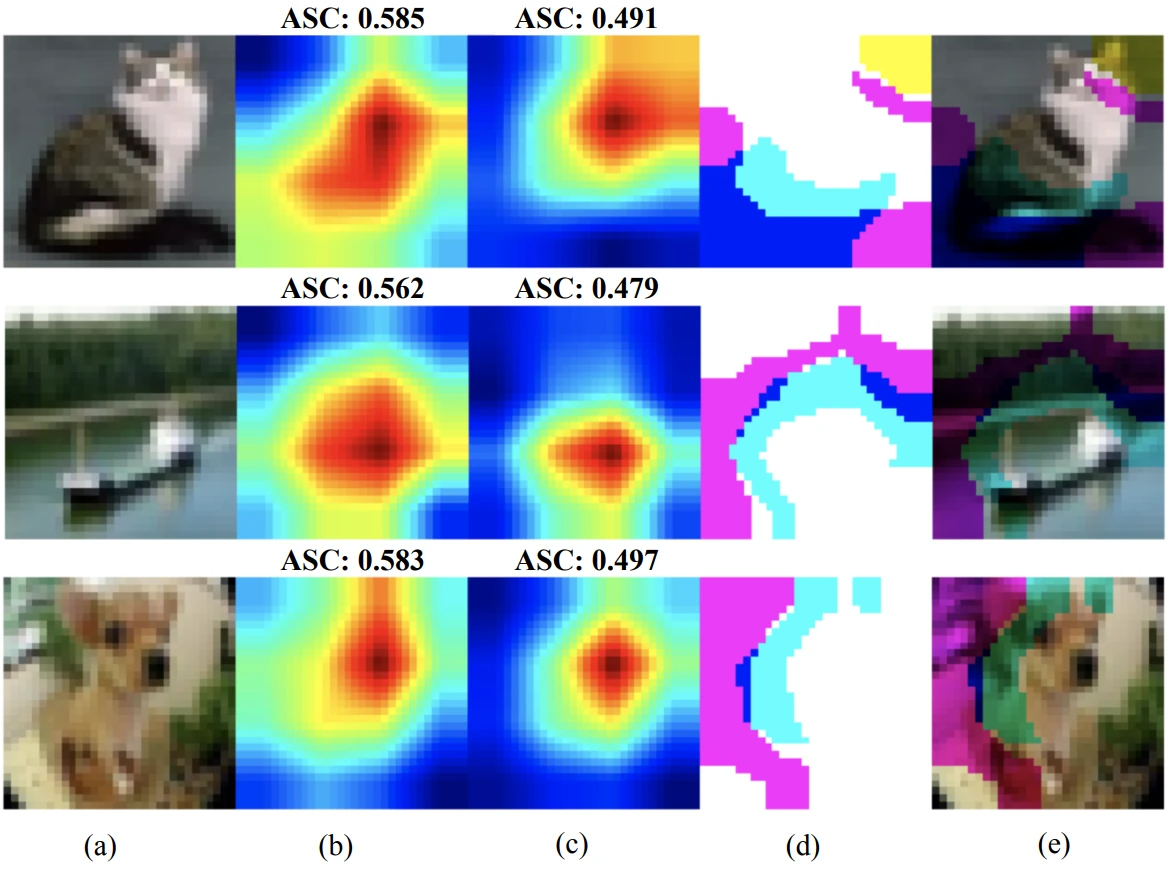

There are 2 papers on the topic at CVPR ’22 by Chen et al. and Salman et al. Both work with vision transformer models and both certify robustness using derandomized smoothing on image ablations, a method introduced by Levine & Feizi in their NeurIPS ’20 paper. Image ablations are illustrated in Fig. 2: essentially, the smooth model slides a column or rectangular window of given pixel size across the image to create the ablations. Each ablation is classified separately. Each ablation class label is one vote, and the smooth model classifies the entire image into the class that received the most votes.

So, how to certify robustness? With derandomized smoothing, an adversarial patch of a given maximum size can only corrupt a limited maximum number of ablations, as it will be blacked out in the rest. The worst-case scenario is that the adversarial patch manipulates the class label of all ablations it intersects into the second-highest voted class. Therefore, if (and only if) this adversarially inflated number of votes is still lower than the number of the votes for the top class, then the model declares the decision robust—no matter how hard the attacker tries, the adversarial patch cannot possibly to influence the smooth model’s decision. If the artificially inflated number of votes for the second-highest voted class bumps it above the top class, the robustness is uncertain and no guarantee is given.

The derandomized smoothing defense guarantee has several advantages. It works regardless of the position of the patch, only the patch size matters. In general, attackers want the patches to be as small as possible to evade detection by visual inspection of the image. But small patches make the derandomized smoothing defense stronger, because the number of potentially corrupted ablations is also lower, and therefore the required margin between the top-voted and second-most-voted class is smaller.

CD methods are evaluated with two key metrics: standard and certified accuracy. Standard accuracy is simply accuracy on clean test images. Of course, we want the CD models not only to be safe, but also perform well on their tasks. Certified accuracy is the percentage of test images that were both accurately classified and deemed robust.

State of the art @ CVPR ’22

With the mathematical guarantee, CD models sound like the clearly superior choice. So why are only two out of tens of adversarial attack papers at CVPR ’22 using certified defense? Besides the general difficulty to come up with mathematical proofs for AI models, CD models exhibit far worse performance in key metrics than non-certified ones. All performance figures reported in the remainder of this text are on the ImageNet dataset, certified accuracy is reported for patches that cover 1% of the image. Salman et al. mention that pre-CVPR ’22 CD models have roughly 25% lower standard accuracy and inference time higher by up to two orders of magnitude. That’s “minus 25%” standard accuracy, not “multiplied by 0.75”. The slow inference time is outright crippling—taking up to 100-1000x the time of a regular model to decide on an input essentially rules out deployment in practice. In return, the user of a pre-CVPR ’22 CD model gets certified accuracy of around 35%. This is a very steep price to pay.

| Standard accuracy | Certified accuracy | Inference time | |

|---|---|---|---|

| ViT-B | 81.8% | N/A | 0.95 s |

| PatchDefense | 55.1% | 32.3% | ? |

| Chen et al. (acc) | 78.6% | 47.4% | 40.5 s |

| Chen et al. (fast) | 73.5% | 46.8% | 16.6 s |

| Salman et al. (acc) | 73.2% | 43.0% | 58.7 s |

| Salman et al. (fast) | 68.3% | 36.9% | 3.2 s |

And that is exactly the point of the two works on certified patch defense using vision transformers. Both works have aimed to increase standard and certified accuracy and reduce the inference time, and the results are summarized in Table 1. For both Chen et al. and Salman et al., the most standard-accurate (“acc”) and fastest (“fast”) variant of their methods are reported. The results are compared to the then-state-of-the-art non-CD model, ViT-B by Dosovitskiy et al. (ICLR ’21), and CD model, PatchDefense by Xiang et al. (USENIX ’21). Unfortunately, PatchDefense does not report inference time on ImageNet, but only on ImageNette, a 10-class subset of ImageNet. The next-best baseline reported by Salman et al. has inference time of 149.5 seconds, warranting the “up to 2 orders of magnitude higher” claim in comparison to ViT-B. Overall, we see that both CVPR ’22 works on certified defense have managed to greatly improve both standard and certified accuracy.

Finally, a word on the key ideas behind both papers. Chen et al. use progressive column smoothing, depicted in Fig. 3. The authors argue that a lot of semantic information is lost by just sweeping column bands of a fixed size across the image to create ablations. Instead, they perform the training in stages. In the first, the transformer is fed ablations with the column width equal to 60% of the image, and is supposed to output the entire image (column width 100%). In the second stage, the input width is 30% and the output width 60%. Finally, the input width is fixed to 19/25/37px (as seen in literature) and the output width is 30%. In each stage, they employ grid tokenization. Salman et al. drop fully-masked tokens to speed up inference. Each ablation obscures the majority of an image, and consequently most of the image tokens fed to transformer correspond to black squares. Preserving spatial information and dropping these “fluff” tokens results in the transformer actually processing only meaningful information, which makes the model more efficient.

Overall, I am quite excited about the concept of certified defense and its potential. We have seen exciting progress at CVPR ’22, and I think there’s still plenty of room to grow, especially with regards to inference time. I will certainly look out for certifiable defense papers on future conference, as I believe this subdiscipline will be increasingly important.

List of CVPR ’22 papers on certifiable defense:

- Chen et al.: Towards Practical Certifiable Patch Defense with Vision Transformer

- Salman et al.: Certified Patch Robustness via Smoothed Vision Transformers

Subscribe

Enjoying the blog? Subscribe to receive blog updates, post notifications, and monthly post summaries by e-mail.