How to see properly: Adversarial defense by data inspection

Introduction

In the AI security @ CVPR ’23 post series, we have already seen a number of adversarial defense techniques that modify the model. These techniques enhance the architecture with robust components or modify the model’s weights by adversarial training. But there’s a third way, which leaves the model untouched: data inspection. Data inspection means scanning the data for adversarial attacks using an additional “inspector” model. If the inspector model detects an attack, we can either discard the attacked data or try to restore them to their clean state, depending what suits our needs.

There are two good reasons to consider data inspection for improved adversarial defense. Firstly, “measure twice, cut once” is a good general engineering principle. Checking our situation before taking a decision is rarely ill-advised. Secondly, data inspection has great potential to alleviate the robust vs. clean accuracy trade-off. If we want to train the model to resist attacks, we have to sacrifice some of the clean accuracy. With a reliable inspector, however, we do not have to modify the model and therefore sacrifice anything.

To me, it is surprising that data inspection is a niche AI security research category. In this post, I summarize 3 papers from CVPR ’23 that fall under the data inspection category—and as I explain below, only two papers really fall fully under data inspection. Perhaps the difficulty lies in the fact that an inspector model is itself an AI model, and therefore itself susceptible to adversarial attacks. At any rate, data inspection is in my opinion a worthy adversarial defense strategy that deserves attention.

Countering with counterfactuals

Jeanneret et al. connect adversarial defense with explainable AI, namely counterfactual explanations (CE). For the given data input, CE models answer the question what needs to change in the data for the current class label to change into another? The answer should be a minimal, complete, and actionable explanation. Receiving an explanation, rather than just the output label, is certainly welcome in human-AI interactions. For example, if a website rejects an ID photo of ourselves we just submitted, receiving “please move your head towards the center” is much more helpful than “invalid photo”. The focus of this work are visual CE models. Visual CE models output a modified input image with just enough semantic changes to be classified into the target class.

Jeanneret et al. observe that visual CE and adversarial attacks have essentially the same goal: to flip a target image’s class with minimal changes to the image. The main difference is that unlike general adversarial attacks, CEs enforce semantic changes. Otherwise, the visual explanation would be meaningless to a human. That said, this difference is not a dealbreaker. Jeanneret et al. observe that attacks against robust models are themselves increasingly semantic in nature. This aligns visual CE and certain adversarial attacks almost perfectly.

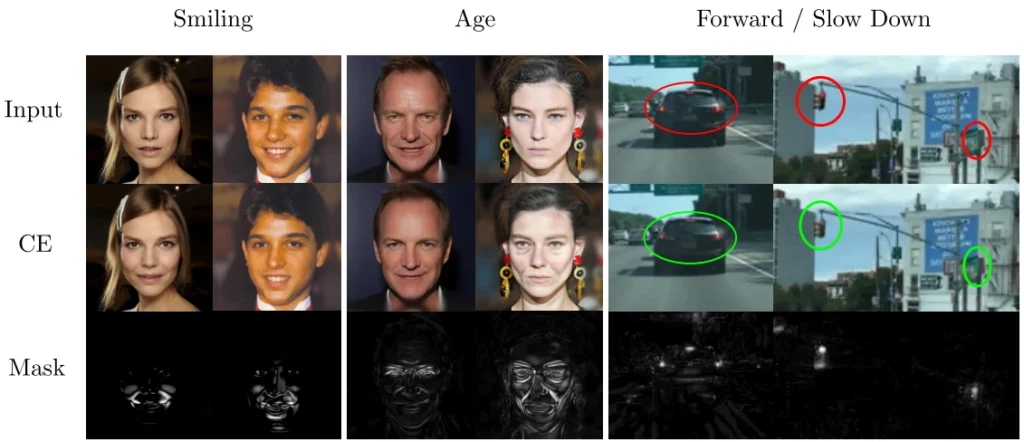

One use of visual CE for adversarial defense is generating natural adversarial examples to be used for adversarial training. Fig. 1 shows how an image class can be flipped with minimal changes to the original image. The use for data inspection I’d imagine would be inspection after our main AI model finishes inference. The CE model takes the input image and tries to flip the class from the output class to a semantically similar class. If the resulting counterfactual example features semantic changes that match the semantic change between the classes, then the image is likely clean. On the other hand, if the explanation does not make sense, there is a high likelihood of attack. Let’s use a toy face verification example to illustrate: our model classifies an input image as an authenticated person. Before letting that person in, the inspector CE model further checks with the CE model and asks “what changes are needed for this human face to be classified as smiling/frowning/sad/… face”. Turns out the difference between the image and the counterfactual examples looks nothing like the bottom left masks in Fig. 1, it’s random noise. The verification pipeline rejects the authentication, and manual inspection reveals the image is a PGD-based adversarial attack using a noisy adversarial perturbation.

A good reflection

The next paper I have classified under the data inspection category is Robust Single Image Reflection Removal Against Adversarial Attacks by Song et al. OK, I have a confession to make. This was a misclassification on my end—reading the title and abstract to filter the papers, I thought the paper about scanning for adversarial attacks that use reflection as adversarial perturbation. However, it is about making single image reflection removal (SIRR) models more robust to adversarial attacks. The authors propose adversarial attacks against SIRR models and means to defend the attacks. This means that this paper would best fit in the adversarial attacks category. Nevertheless, I still think there is value in looking at this specific work in the data inspection context.

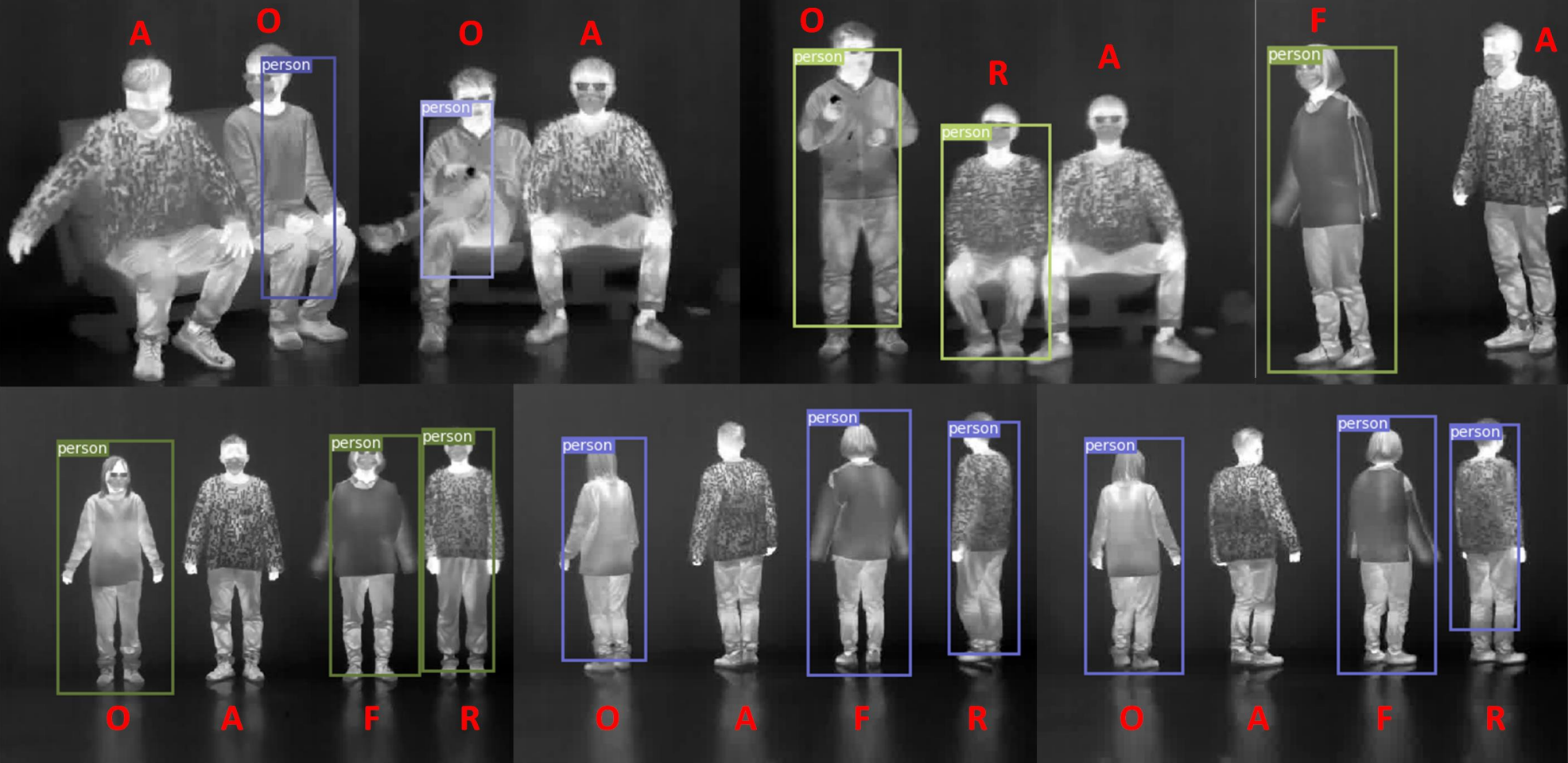

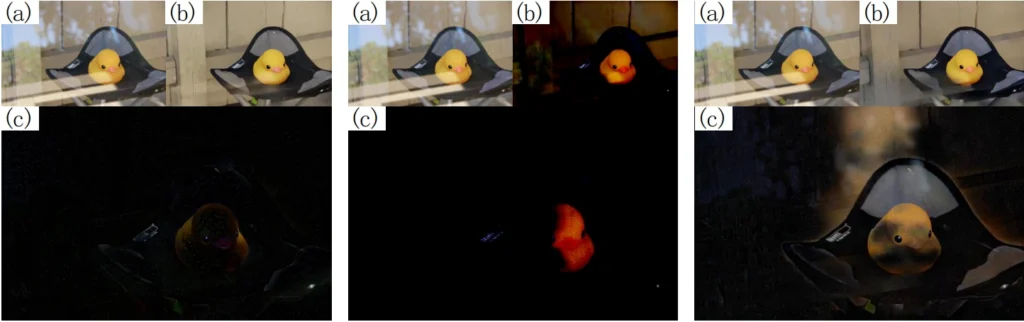

Fig. 2 shows that much like other models, SIRR models are susceptible to adversarial attacks: attacked images come out unusably dark. To remedy this, Song et al. first propose a set of PGD-based adversarial attacks on SIRR models. The attacks differ in regions they attack: the whole image, the regions with reflections, or the regions without reflections. As a method to bolster adversarial defense, Song et al. propose three techniques. Firstly, multi-scale processing. Since an adversarial perturbation is created with a certain image scale in mind, rescaling the image has been shown as a simple technique likely to destroy it. Secondly, using attention blocks for feature extraction. Whilst transformers are not robust by design, CNN-optimized attacks won’t work as easily against attention layers. Moreover, transformers bolster the robustness of the related deraining task, so they are relevant for SIRR as well. Finally, employing the AID detector. The robustified SIRR model indeed demonstrates better adversarial defenses and counters adversarial attacks successfully.

So how about that data inspection use? Assume the attacker uses adversarial image reflection as his adversarial perturbation of choice. Deploying a robust SIRR inspector model would highly likely counter an adversarial reflection attack. The SIRR model is itself robust to adversarial attacks, so it will simply remove the reflection as a pre-processing step whilst preserving the information about the object that is the actual focus of the image. The “repaired” harmless image would then be fed to our AI model, which would in turn output the correct class. I think robust models specialized in specific image processing tasks have a great potential to be good data inspectors and boost adversarial defense.

From frequency to entropy

Last, but not least, the paper by Tarchoun et al. proposes a model that removes adversarial patches. Removing adversarial patches and ideally also repairing the image are core data inspection tasks. Existing approaches have mostly focused on the frequency characteristics of the inspected image. Classic adversarial patches are colorful and noisy, creating noticeable high-frequency regions. However, frequency-based patch removal approaches fail against more modern adversarial patch methods that introduce semantic changes in the image. To overcome this, Tarchoun et al. propose an entropy-based patch removal method.

I like papers where the intro figure gives a clear overview of the method, and this paper is a bright example of this—Fig. 3 shows the pipeline of the method. First, the method detects entropy peaks in the image, and employs an auto-encoder for patch localization. The patch is cut out and finally, inpainting samples the data surrounding the “hole” in the image to fill it in with plausible content matching what would be the clean image as faithfully as possible. The authors note that the inpainting result does not have to be pixel-perfect, just plausible enough to defuse the patch and look reasonable. This method shows state-of-the-art performance on patch removal and good adaptation to modern adversarial patch attacks.

List of papers

- Jeanneret et al.: Adversarial Counterfactual Visual Explanations

- Song et al.: Robust Single Image Reflection Removal Against Adversarial Attacks

- Tarchoun et al.: Jedi: Entropy-Based Localization and Removal of Adversarial Patches

Subscribe

Enjoying the blog? Subscribe to receive blog updates, post notifications, and monthly post summaries by e-mail.