Better model architecture, better adversarial defense

Introduction

Alright, let’s dive fully into adversarial defense in our AI security @ CVPR ’23 series. Adversarial defense papers don’t present new attacks, but rather look to bolster model defenses against various existing attacks. There has been a noticeable increase in the number of adversarial defense papers between CVPR ’22 and ’23. If we “painted” the conferences using the classic security coloring (attackers = red, defenders = blue), then CVPR ’22 is red and CVPR ’23 purple. Notably, 3 out of 4 AI security official paper highlights at CVPR ’23 are on adversarial defense, so it is certainly a topic to be taken seriously.

Last week’s post has compared model architectures to each other in terms on robustness, drawing from the work of A. Liu et al. This week we look at the 9 remaining papers that seek to bolster adversarial defense by making the architecture of the model more robust.

The grand design

Firstly, let’s cover papers that take a holistic approach, considering the architecture as a whole. In the previous posts, we have already seen two remarkable papers falling under this category. Firstly, the Generalist paper by Wang & Wang that has received the CVPR ’23 highlight distinction. Instead of a single model, Generalist trains three models with the same architecture: one optimizing natural accuracy, one optimizing robust accuracy, and the third global model weaves the best of the two worlds together. Secondly, the paper by A. Liu et al. paper covered last week that provides the data for a detailed architecture robustness comparison falls under this category too. Let’s now look at the two final papers that take a holistic approach.

Croce et al. follow the same conceptual line as Wang & Wang: instead of a single model, they optimize several models which are then aggregated. Croce et al. propose to use model soups in adversarial setting. Model soups interpolate the parameters of multiple models, where each model may be optimized for a different purpose. In this work, the individual models are each optimized to defend against a different \(l\)-norm attack, namely \(l_1\), \(l_2\), and \(l_{\infty}\)-norm attacks. Each model soup is then a convex combination of the individual models. The choice of the combination weights translates directly to which attacks are going to be defended the best. Expectedly, there is a trade-off and we cannot 100% defend against everything at the same time. Fig. 1 demonstrates the performance of ResNet-50 and ViT-B16 model soups with various convex combination parameter choices. Croce et al. further state that models may be put in a soup even if they differ in architecture somewhat, as long as functionally matching components are interpolated with each other. This makes the method quite flexible.

The paper by B. Huang et al. looks at knowledge distillation which operates in a teacher-student setting. The larger teacher model teaches the smaller student model the given task, distilling the knowledge in the process. The student model is able to solve the task whilst being smaller, so it is “denser”, distilled. B. Huang et al. note that by default, student models are especially vulnerable to adversarial attacks. Beyond the usual susceptibility shared among all models, there is no easy way to adversarially train them. Therefore, they highlight the need for adversarial distillation, a distillation process that preserves the adversarial defense capabilities of the teacher. The paper introduces adaptive adversarial distillation (AdaAD) in which the teacher and the student interact and adaptively search for the best adversarial configuration. AdaAD leverages the Kullback-Leibler divergence to minimize the discrepancy between output probabliity distributions on adversarial examples of the teacher and student models.

Manipulating features

Another approach to boost adversarial defense is to look at specific features and their contribution to model output. In the post covering CVPR ’23 AI security highlight papers, we have already seen one highlight paper on the topic, the feature recalibration paper by W. Kim et al. W. Kim et al. propose not to throw out the features that are responsible for an adversarial attack’s success, because they may still capture important information. Instead, they provide a method to recalibrate them, which preserves their useful properties and nullifies the adversarial weakness.

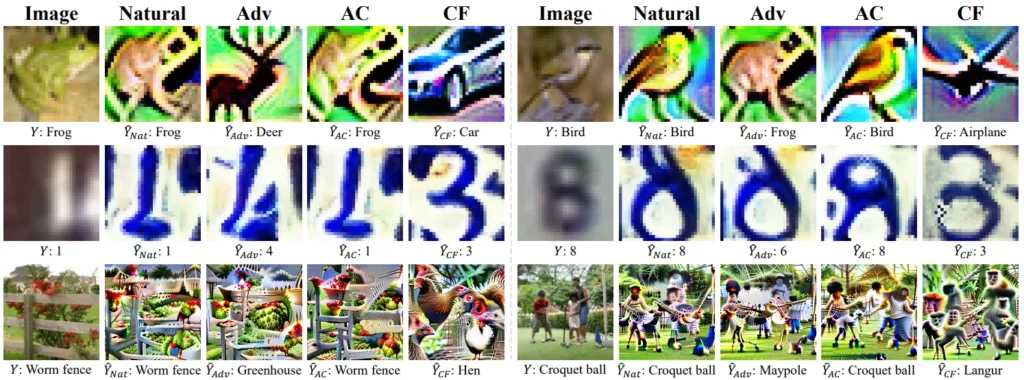

J. Kim et al. look at the features from a causal perspective, invoking instrumental variable (IV) regression, a classic statistical technique. IV regression is a tool to identify causal relationships between observed variables in randomized experimental settings. Without going into too much technical detail, IV regression uncovers a causal link between the instrument (the “causer”) variable and the observed output variable, erasing the influence of other confounding variables. To be unbiased about the environment and underlying probability distributions, the authors use the generalized method of moments (GMM) as a non-parametric estimate for IV regression. Deployed on an intermediate feature layer, the method allows to calculate the following four feature components that enable insight into the nature of an adversarial attack:

- Natural features — the features responsible for the correct classification

- Adversarial features — the features responsible for adversarial misclassification

- Adversarial causal features — adversarial features cleaned of confounders

- Counterfactual features — adversarial features pushed to the worst-case semantic interpretation (= maximum semantic misunderstanding) under the GMM method

Fig. 2 visualizes the four feature components on 6 image examples. We can see how adversarial examples indeed push the image away from its true class. We see how the method is able to “clean up” the confounders by introducing causality, as AC features would be classified into the true class. Finally, we see some interesting properties of the counterfactuals, for example in the bottom left example: bright red flowers are transformed into plausible, if slightly psychedelic, hen/rooster body parts. Finally, the authors provide a method to leverage this knowledge to inoculate the given intermediate feature layer against adversarial attacks. This is an interesting paper: highly technical—indeed took me a while to summarize—but it gives interesting insights once you chew through the math. And also, by now it’s probably clear I have a thing for deploying classic stats methods in modern machine learning…

In the final paper falling under this category, Dong & Xu focus on injecting randomness into features to combat adversarial attacks. It’s a simple concept: if a part of the model’s inference is random, then the attacker has no way to fully optimize the attack against the model. Existing work has usually injected Gaussian noise into the features, but Dong & Xu propose a stronger technique: introducing random projection filters. Random projection is a dimensionality reduction technique for Euclidean spaces that, as the name hints, randomly projects high-dimensional points into a lower-dimensionality space. Random projection preserves the original distances and the error introduced by the dimensionality reduction is reasonable. Dong & Xu introduce random projection filters as layers in the defense-boosted models, improving adversarial robustness.

Tweaking specific architectures

The final three papers of this post all concern adversarial defense of specific model architectures. S. Huang et al. revisit residual networks (ResNets), providing several practical observations, from which I would highlight three. Firstly, they recommend pre-activation, i.e., putting activation functions before convolutional layers. Secondly, they note that using squeeze-and-excitation (SE) blocks presents a severe adversarial vulnerability. Thirdly, they note that a deep and narrow ResNet architecture is more robust than wide and shallow. To put the observations into practice, the paper proposes adversarially robust ResNet blocks with pre-activations, adversarially robust SE modules, and robust scaling of the net towards the deep and narrow architecture. Finally, these components are put in a single robust ResNet that is shown to be more resilient to adversarial attacks.

Li & Xu focus on vision transformer (ViT) models, namely the trade-off between natural and robust accuracy. Since ViTs are expensive to train and fine-tune, Li & Xu propose an inexpensive method to select the model’s position on the trade-off. Their TORA-ViT adds a pre-trained robustness and natural accuracy adapter after the fully connected layers, with the contribution of the two adapters to the final output being tunable by the parameter \(\lambda\) (higher \(\lambda\) means more robustness). Fig. 3 shows the visualization of attention to various parts of the image under different \(\lambda\). Interestingly enough, the robustness adapter focuses on the particular object to be classified, whereas the accuracy adapter looks at the overall context of the image.

Finally, the paper of Xie et al. looks at the adversarial defense capabilities of graph neural networks (GNNs). They too are naturally susceptible to adversarial attacks. As the paper’s title suggests, Xie et al. propose a search method aiming to find a robust neural architecture. The criterion of robustness is the Kullback-Leibler (KL) divergence between the predictions on clean and adversarially perturbed data. Since the search space can explode rather quickly when considering GNN architectures, Xie et al. engage an evolutionary algorithm as a tractable estimate for the optimization. The GNN architectures found by the proposed method indeed show a greatly improved robustness compared to the vanilla GNNs.

List of papers

- Croce et al.: Seasoning Model Soups for Robustness to Adversarial and Natural Distribution Shifts

- Dong & Xu: Adversarial Robustness via Random Projection Filters

- B. Huang et al.: Boosting Accuracy and Robustness of Student Models via Adaptive Adversarial Distillation

- S. Huang et al.: Revisiting Residual Networks for Adversarial Robustness

- J. Kim et al.: Demystifying Causal Features on Adversarial Examples and Causal Inoculation for Robust Network by Adversarial Instrumental Variable Regression

- W. Kim et al.: Feature Separation and Recalibration for Adversarial Robustness

- Li & Xu: Trade-Off Between Robustness and Accuracy of Vision Transformers

- A. Liu et al.: Exploring the Relationship Between Architectural Design and Adversarially Robust Generalization

- Wang & Wang: Generalist: Decoupling Natural and Robust Generalization

- Xie et al.: Adversarially Robust Neural Architecture Search for Graph Neural Networks

Subscribe

Enjoying the blog? Subscribe to receive blog updates, post notifications, and monthly post summaries by e-mail.