Cheatsheet of AI security papers from CVPR ’22

In my AI security @ CVPR ’22 blog post series, I cover all AI security papers presented at the conference. This post is a cheatsheet with links. The papers are grouped by contribution, using the same categories as the blog posts: each clickable heading takes you to the post covering that category.

Adversarial attacks

Classic adversarial attacks

- Byun et al.: Improving the Transferability of Targeted Adversarial Examples through Object-Based Diverse Input

- Cai et al.: Zero-Query Transfer Attacks on Context-Aware Object Detectors

- Dhar et al.: EyePAD++: A Distillation-based approach for joint Eye Authentication and Presentation Attack Detection using Periocular Images

- Feng et al.: Boosting Black-Box Attack with Partially Transferred Conditional Adversarial Distribution

- He et al.: Transferable Sparse Adversarial Attack

- Jia et al.: LAS-AT: Adversarial Training with Learnable Attack Strategy

- Jin et al.: Enhancing Adversarial Training with Second-Order Statistics of Weights

- Lee et al.: Masking Adversarial Damage: Finding Adversarial Saliency for Robust and Sparse Network

- Li et al.: Subspace Adversarial Training

- Liu et al.: Practical Evaluation of Adversarial Robustness via Adaptive Auto Attack

- Lovisotto et al.: Give Me Your Attention: Dot-Product Attention Considered Harmful for Adversarial Patch Robustness

- Luo et al.: Frequency-driven Imperceptible Adversarial Attack on Semantic Similarity

- Pang et al.: Two Coupled Rejection Metrics Can Tell Adversarial Examples Apart

- Sun et al.: Exploring Effective Data for Surrogate Training Towards Black-box Attack

- Tsiligkaridis & Roberts: Understanding and Increasing Efficiency of Frank-Wolfe Adversarial Training

- Vellaichamy et al.: DetectorDetective: Investigating the Effects of Adversarial Examples on Object Detectors

- Wang et al.: DST: Dynamic Substitute Training for Data-free Black-box Attack

- Xiong et al.: Stochastic Variance Reduced Ensemble Adversarial Attack for Boosting the Adversarial Transferability

- Xu et al.: Bounded Adversarial Attack on Deep Content Features

- Yu et al.: Towards Robust Rain Removal Against Adversarial Attacks: A Comprehensive Benchmark Analysis and Beyond

- C. Zhang et al.: Investigating Top-k White-Box and Transferable Black-box Attack

- Jianping Zhang et al.: Improving Adversarial Transferability via Neuron Attribution-Based Attacks

- Jie Zhang et al.: Towards Efficient Data Free Black-box Adversarial Attack

- Zhou et al.: Adversarial Eigen Attack on Black-Box Models

Certifiable defense

- Chen et al.: Towards Practical Certifiable Patch Defense with Vision Transformer

- Salman et al.: Certified Patch Robustness via Smoothed Vision Transformers

Non-classic adversarial attacks

- Berger et al.: Stereoscopic Universal Perturbations across Different Architectures and Datasets

- Chen et al.: NICGSlowDown: Evaluating the Efficiency Robustness of Neural Image Caption Generation Models

- Dong et al.: Improving Adversarially Robust Few-Shot Image Classification With Generalizable Representations

- Gao et al.: Can You Spot the Chameleon? Adversarially Camouflaging Images from Co-Salient Object Detection

- Huang et al.: Shape-invariant 3D Adversarial Point Clouds

- Li et al.: Robust Structured Declarative Classifiers for 3D Point Clouds: Defending Adversarial Attacks with Implicit Gradients

- Özdenizci et al.: Improving Robustness Against Stealthy Weight Bit-Flip Attacks by Output Code Matching

- Pérez et al.: 3DeformRS: Certifying Spatial Deformations on Point Clouds

- Ren et al.: Appearance and Structure Aware Robust Deep Visual Graph Matching: Attack, Defense and Beyond

- Schrodi et al.: Towards Understanding Adversarial Robustness of Optical Flow Networks

- Thapar et al.: Merry Go Round: Rotate a Frame and Fool a DNN

- Wang et al.: Bandits for Structure Perturbation-based Black-box Attacks to Graph Neural Networks with Theoretical Guarantees

- Wei et al.: Cross-Modal Transferable Adversarial Attacks from Images to Videos

- Zhang et al.: 360-Attack: Distortion-Aware Perturbations From Perspective-Views

- Zhou & Patel: Enhancing Adversarial Robustness for Deep Metric Learning

Physical adversarial attacks

- Hu et al.: Adversarial Texture for Fooling Person Detectors in the Physical World

- Liu et al.: Segment and Complete: Defending Object Detectors against Adversarial Patch Attacks with Robust Patch Detection

- Suryanto et al.: DTA: Physical Camouflage Attacks using Differentiable Transformation Network

- Zhang et al.: On Adversarial Robustness of Trajectory Prediction for Autonomous Vehicles

- Zhong et al.: Shadows can be Dangerous: Stealthy and Effective Physical-world Adversarial Attack by Natural Phenomenon

- Zhu et al.: Infrared Invisible Clothing: Hiding from Infrared Detectors at Multiple Angles in Real World

Backdoor/Trojan attacks

- Chen et al.: Quarantine: Sparsity Can Uncover the Trojan Attack Trigger for Free

- Feng et al.: FIBA: Frequency-Injection based Backdoor Attack in Medical Image Analysis

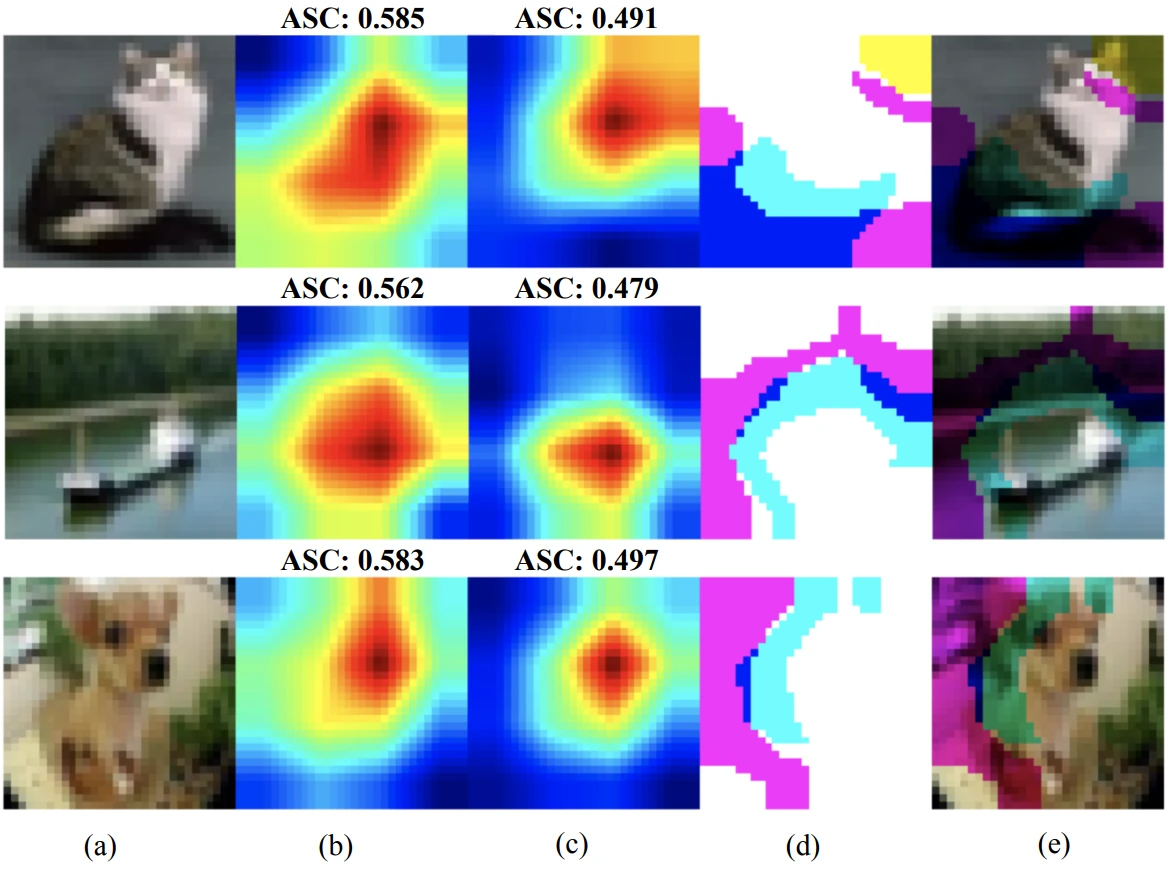

- Guan et al.: Few-shot Backdoor Defense Using Shapley Estimation

- Liu et al.: Complex Backdoor Detection by Symmetric Feature Differencing

- Qi et al.: Towards Practical Deployment-Stage Backdoor Attack on Deep Neural Networks

- Saha et al.: Backdoor Attacks on Self-Supervised Learning

- Tao et al.: Better Trigger Inversion Optimization in Backdoor Scanning

- Walmer et al.: Dual-Key Multimodal Backdoors for Visual Question Answering

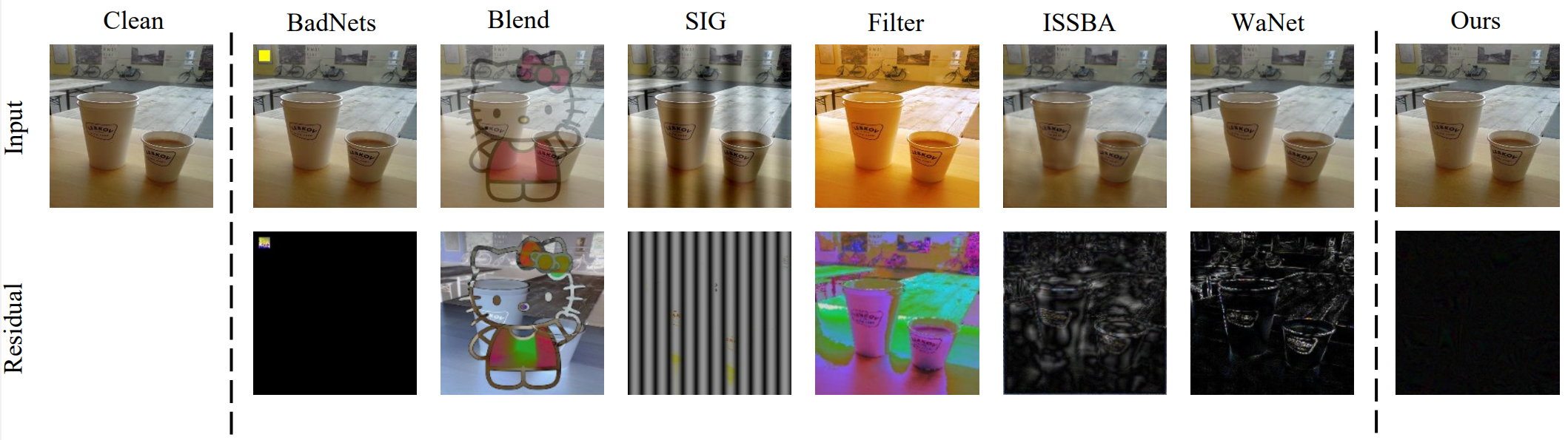

- Wang et al.: BppAttack: Stealthy and Efficient Trojan Attacks against Deep Neural Networks via Image Quantization and Contrastive Adversarial Learning

- Zhao et al.: DEFEAT: Deep Hidden Feature Backdoor Attacks by Imperceptible Perturbation and Latent Representation Constraints

Model inversion attacks

- Del Grosso et al.: Leveraging Adversarial Examples to Quantify Membership Information Leakage

- Hatamizadeh et al.: GradViT: Gradient Inversion of Vision Transformers

- Kahla et al.: Label-Only Model Inversion Attacks via Boundary Repulsion

- Kim: Robust Combination of Distributed Gradients Under Adversarial Perturbations

- J. Li et al.: ResSFL: A Resistance Transfer Framework for Defending Model Inversion Attack in Split Federated Learning

- Z. Li et al.: Auditing Privacy Defenses in Federated Learning via Generative Gradient Leakage

- Lu et al.: APRIL: Finding the Achilles’ Heel on Privacy for Vision Transformers

- Ng et al.: NinjaDesc: Content-Concealing Visual Descriptors via Adversarial Learning

- Peng et al.: Fingerprinting Deep Neural Networks Globally via Universal Adversarial Perturbations

- Sanyal et al.: Towards Data-Free Model Stealing in a Hard Label Setting

Image manipulation & deepfake detection

- Asnani et al.: Proactive Image Manipulation Detection

- Chen et al.: Self-supervised Learning of Adversarial Example: Towards Good Generalizations for Deepfake Detection

- Hu et al.: Protecting Facial Privacy: Generating Adversarial Identity Masks via Style-robust Makeup Transfer

- Jia et al.: Exploring Frequency Adversarial Attacks for Face Forgery Detection

- Shiohara & Yamasaki: Detecting Deepfakes with Self-Blended Images

- Wu et al.: Robust Image Forgery Detection Over Online Social Network Shared Images
