How secure is computer vision? AI & security at CVPR ’22

Computer vision (CV) is one of the vanguards of AI, and its importance is rapidly surging. For example, CV models perform personal identity verification, assist physicians in diagnosis, and enable self-driving vehicles. The performance of CV models has increased remarkably, dispelling much of the doubt related to their efficiency. With their increased involvement in critical, sensitive real-world decisions, another question is being asked more and more frequently: How secure is computer vision? Given CV’s vanguard position in AI, this question is of core importance to AI & security as a whole.

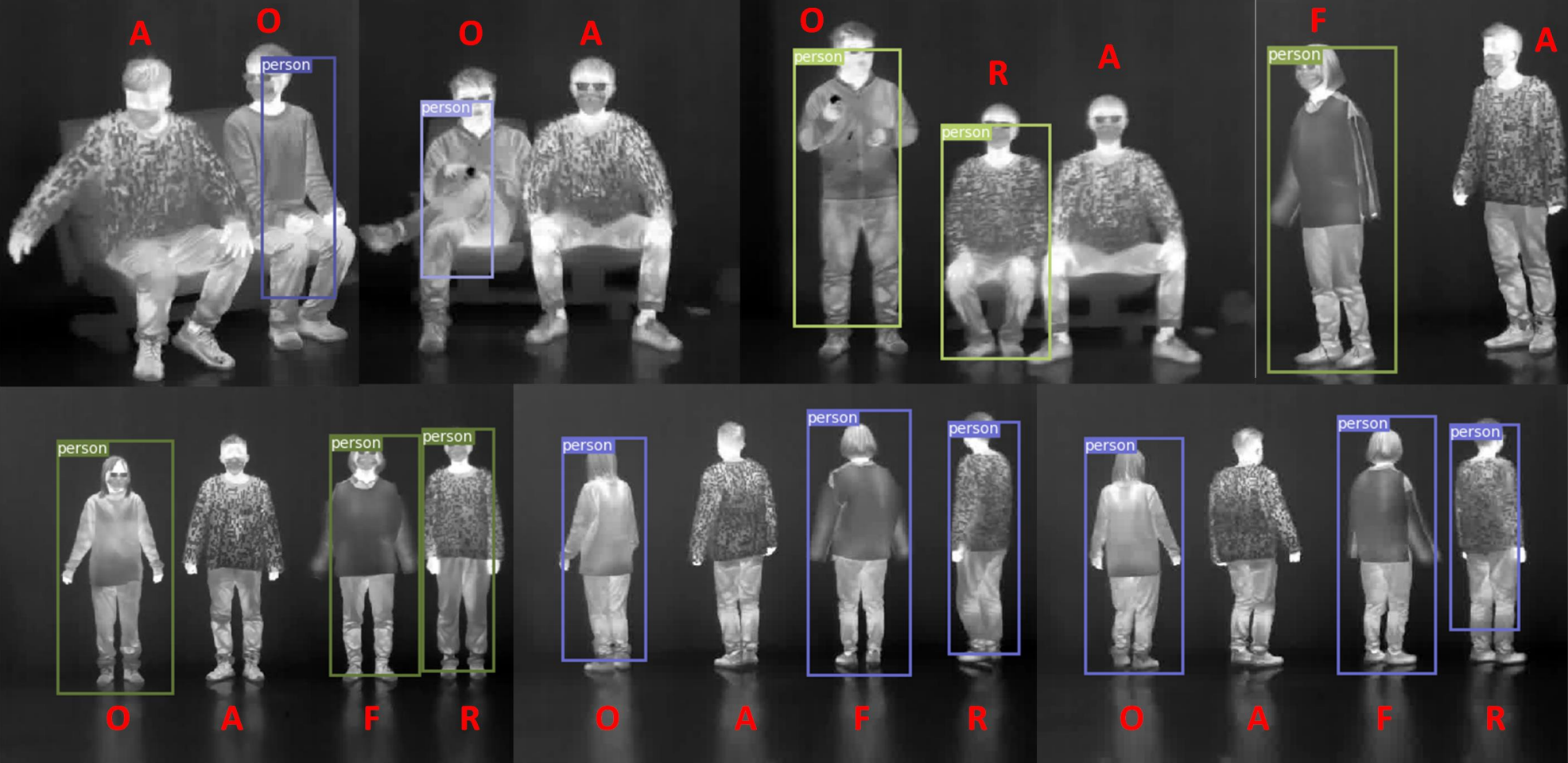

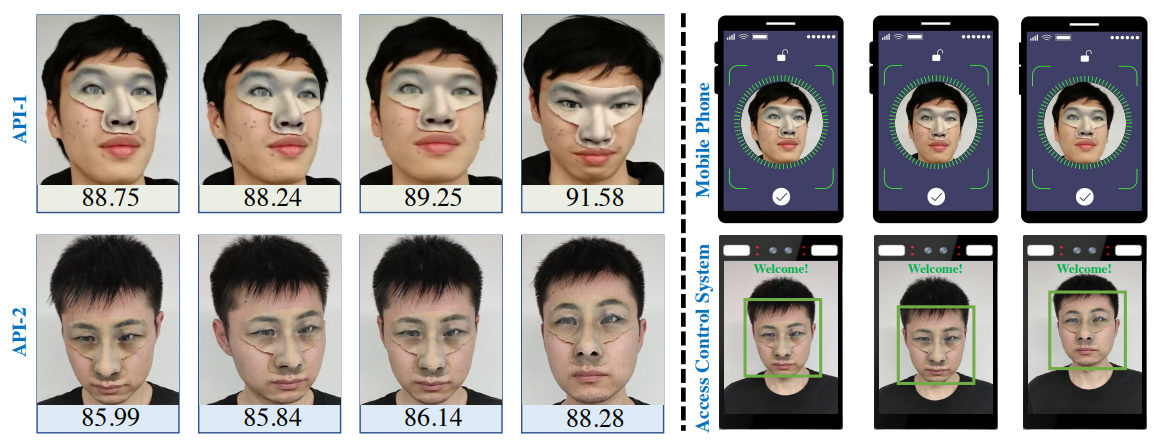

The honest answer is: not that secure. There are various ways to trick a CV model into behaving in a way that its creator did not intend but that the attacker desires. For example, as shown in Fig. 1, a carefully crafted shadow can throw a traffic sign detector off and induce dangerous behavior in a self-driving vehicle. Face identification models can be fooled into authenticating a different person. Private data used to train a CV model can be faithfully reconstructed from the model, as shown in Fig. 2. More often than not, the attacks are stealthy and inconspicuous: the images used for an attack look completely benign. This makes the attacks even more dangerous.

There is a silver lining: it has been known for several years now that CV models are susceptible to these attacks, and research in computer vision security is very active. In this blog post series, I cover the results presented at the 2022 edition of the top CV conference, the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR).

CVPR ’22 saw a record number of submissions and, consequently, a record 2063 accepted papers. It would be an ordeal to go through them one by one, so I adopted a heuristic search to get the list of security-related papers:

- Title keyword search for the following words and phrases: adversarial, backdoor, trojan, attack, defense, model inversion.

- Adding all papers in the conference’s security-titled sessions. At CVPR ’22, I have identified 1 oral and 3 poster sessions of relevance:

  - Oral 3.2.1: Security, Transparency, Fairness, Accountability, Privacy & Ethics in Vision

  - Privacy and Federated Learning (poster session)

  - Transparency, Fairness, Accountability, Privacy & Ethics in Vision (poster session)

  - Adversarial Attack & Defense (poster session)

- Filtering the papers from the previous steps by title and abstract to ensure that each one is directly related to security. I have only considered papers that explicitly deal with attacks against CV models or defenses against them.
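The first two steps of this search are mechanical, so they can be sketched in a few lines of Python. This is only an illustration of the idea, not the script used for this post: the paper records, their `title`/`session` fields, and the sample titles are all made up, and matching keywords as case-insensitive word prefixes (so that "attack" also catches "attacks") is my assumption. The third step, reading titles and abstracts, remains manual.

```python
import re

# Keywords and phrases from the title search step.
KEYWORDS = ["adversarial", "backdoor", "trojan", "attack", "defense", "model inversion"]

# Session names from the second step.
SECURITY_SESSIONS = {
    "Security, Transparency, Fairness, Accountability, Privacy & Ethics in Vision",
    "Privacy and Federated Learning",
    "Transparency, Fairness, Accountability, Privacy & Ethics in Vision",
    "Adversarial Attack & Defense",
}

# Assumption: a keyword matches at the start of a word, ignoring case,
# so "attack" also matches "Attacks" and "defense" matches "Defenses".
PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(k) for k in KEYWORDS) + r")", re.IGNORECASE
)


def select_candidates(papers, security_sessions=SECURITY_SESSIONS):
    """Keep papers whose title hits a keyword or that sit in a security session.

    `papers` is a list of dicts with 'title' and 'session' keys
    (a hypothetical schema chosen for this sketch).
    """
    selected = []
    for paper in papers:
        by_title = PATTERN.search(paper["title"]) is not None
        by_session = paper["session"] in security_sessions
        if by_title or by_session:
            selected.append(paper)
    return selected


# Made-up records, only to show the behavior of the filter.
papers = [
    {"title": "Adversarial Patches for Object Detectors", "session": "Recognition"},
    {"title": "A Study of Image Segmentation", "session": "Segmentation"},
    {"title": "Learning Private Representations", "session": "Privacy and Federated Learning"},
    {"title": "Backdoor Attacks on Vision Transformers", "session": "Adversarial Attack & Defense"},
    {"title": "Model Inversion Revisited", "session": "Recognition"},
]

candidates = select_candidates(papers)
for paper in candidates:
    print(paper["title"])
```

A filter like this over-selects on purpose: a title can mention "attack" or sit in a privacy session without being about attacks on CV models, which is why the manual pass over titles and abstracts follows.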

This search yielded a total of 73 papers. This means that 3.5% of CVPR ’22 papers were on security research. Compared to CVPR ’17, this is a massive surge, as that year saw only 1 security paper out of 783 accepted, or 0.1% of all accepted papers. Note that by 2017, the scientific community had known for 3+ years that CV models can be attacked, so the low number did not stem from a lack of awareness of the security problems. The stats therefore support the claim that computer vision security research is very active.
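For readers who want to check the arithmetic, here is a minimal sketch that recomputes the two shares from the counts quoted above. The helper name `share_percent` is my own, and the ratio of the two shares is a derived figure that does not come from any conference statistic.

```python
def share_percent(count, total):
    """Return `count` as a percentage of `total`."""
    return 100.0 * count / total


# Counts quoted in the text: security papers / accepted papers.
cvpr22 = share_percent(73, 2063)
cvpr17 = share_percent(1, 783)

print(f"CVPR '22: {cvpr22:.1f}%")  # prints "CVPR '22: 3.5%"
print(f"CVPR '17: {cvpr17:.1f}%")  # prints "CVPR '17: 0.1%"

# Derived figure: how many times larger the share became.
print(f"Growth in share: about {cvpr22 / cvpr17:.0f}x")  # about 28x
```

Both shares are taken relative to accepted papers, so the comparison is unaffected by how many papers were submitted in either year.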

I have read all 73 papers and sorted them into 4 major categories. Over a couple of blog posts, I would like to convey a summary of the researched topics and results. The categories are as follows; each available link sends you to the paper summary for that category:

- Adversarial attacks (47 papers)

  - Adversarial attacks terminology, classic attacks (24 papers)

  - Certifiable defense @ CVPR ’22 (2 papers)

  - Non-classic adversarial attacks (15 papers)

  - Physical adversarial attacks (6 papers)

- Backdoor attacks (10 papers)

- Model inversion, model/data stealing (10 papers)

- Image manipulation, deepfake detection (6 papers)
