What’s more powerful than one adversarial attack?

Multiple adversarial attacks, of course. So far, on this blog, I have covered scientific works that perform a single adversarial attack. Hinting that collectively, these attacks might be a pretty rich-equipped toolbox, but only hinting. Today is the time to actually put a toolbox together: this post covers AutoAttack, the pioneer ensemble adversarial attack that tries a variety of perturbations to penetrate the target’s defenses. AutoAttack has become a staple in evaluating an AI model’s adversarial defenses, so let’s unpack.

First, a bit of motivation. There’s a good reason for ensembling one’s attacks. Succeeding with a single attack is pretty much only possible in films. You know, the Hollywood “chess prodigy” hackers that stare at computery-looking meaningless 3D visualizations, then say “oh he’s good” about the adversary, and then save the world in the last possible moment with a single attack/defense technique… In real cybersecurity, you have to try a lot of possible attacks if you are an attacker, and you have to defend against a wide variety of attacks if you are a defender. So, how to perform ensemble attacks in the AI domain, given they have proven their mettle in classic cybersecurity?

Maximizing damage by autoattacking

AutoAttack, the pioneer and still golden standard ensemble adversarial attack, has been introduced in the seminal ICML ’20 paper by Croce and Hein. By 2020, the palette of adversarial attacks had already become quite colorful. However, most adversarial defense works of the time validated their robustness solely against models adversarially trained on projected gradient descent (PGD) or fast gradient sign method (FGSM), which is essentially one-step PGD. PGD certainly is the uncontested king of adversarial attacks—this has been true in 2020 and remains true in 2024—but it is not the be-all-end-all. As a result, reporting only PGD results overestimates the adversarial robustness of the evaluated model. And that is a problem.

Croce and Hein set out to fix it, proposing three steps:

- Fixing the problems of vanilla PGD

- Fixing the loss function used to construct the attack

- Ensembling PGD with other diverse attacks to better test the model’s versatility

In the following sections, we’ll look at them in more detail.

Holes in hole finding

PGD, an algorithm that looks for “holes in the manifold”, has some holes itself. Basically, PGD does not really “know” where it is going. It can be likened to a fit, but somewhat clueless tourist freshly arriving in the mountains. It knows which way the mountain is, wastes no time ascending it, and arrives at the peak in no time if the slope is continuous… but sometimes, the mountain terrain is a bit more jagged and treacherous, and that’s where newbie tourists fail sometimes.

Namely, Croce and Hein identify three main problems of vanilla PGD. Firstly, the fixed step size PGD takes, which does not guarantee convergence. Secondly, PGD is attack budget agnostic, which often results in the loss plateauing after a few iterations. This in turn results in the attack potency not being proportional to the number of iterations. Finally, PGD focuses solely on the gradient descent and has no clue about how successful the actual attack is. All in all, the key parameters of the journey have to be set beforehand, with limited prior knowledge of the terrain, and PGD cannot adapt to the circumstances encountered on the go. An inexperienced mountain tourist indeed.

To counter this weakness, Croce and Hein propose Auto-PGD. As the first countermeasure, Auto-PGD introduces momentum to break out of local optima. Its step size is dynamic: the algorithm can automatically determine whether it has reached a checkpoint, and if it has, it can decide whether to change the step size. If it does, the search is restarted from the best-result point achieved so far. Overall, Auto-PGD can switch between exploration and exploitation, and the only free parameter is the number of iterations.

The model’s loss, the attacker’s gain

The loss function used in vanilla PGD is the cross-entropy (CE) loss—makes sense, CE loss is the loss function used in training most models. However, Croce and Hein show that robustness results resulting from PGD+CE can be manipulated by simple rescaling of the model decisions by a constant, due to CE suffering from vanishing gradients. A 2017 paper by Carlini and Wagner proposes a number of alternative losses, the most promising one being the difference of logits loss, dubbed CW (Carlini-Wagner) loss in the AutoAttack paper:

\(CW(x, y) = -z_y + \max_{i \neq y} z_i \)

The notation follows the ML standard: \(x\) refers to the data, \(y\) to the class labels, \(z\) to the logits. Croce and Hein note that this loss, too, is not scale invariant, and propose the scale- and shift-invariant difference of logits ratio (DLR) loss:

\(DLR(x, y) = -\frac{z_y – \max_{i \neq y} z_i}{z_{\pi1} – z_{\pi3}} \)

Here, \(\pi j\) refers to the \(j\)-th position of the logit in the logit ordering by value (descending). Note that this version of the DLR loss is for untargeted attacks. No worries, Croce and Hein also provide a targeted version in the paper.

Assembling the AutoAttack

With all the pieces assembled, we can move onto combining them into AutoAttack. AutoAttack is an ensemble of four attacks:

- Auto-PGD with CE loss

- Auto-PGD with DLR loss

- Fast adaptive boundary (FAB) attack (Croce & Hein, ICML ’20) — A fast white-box attack that aims to find the minimal perturbation that flips the class label.

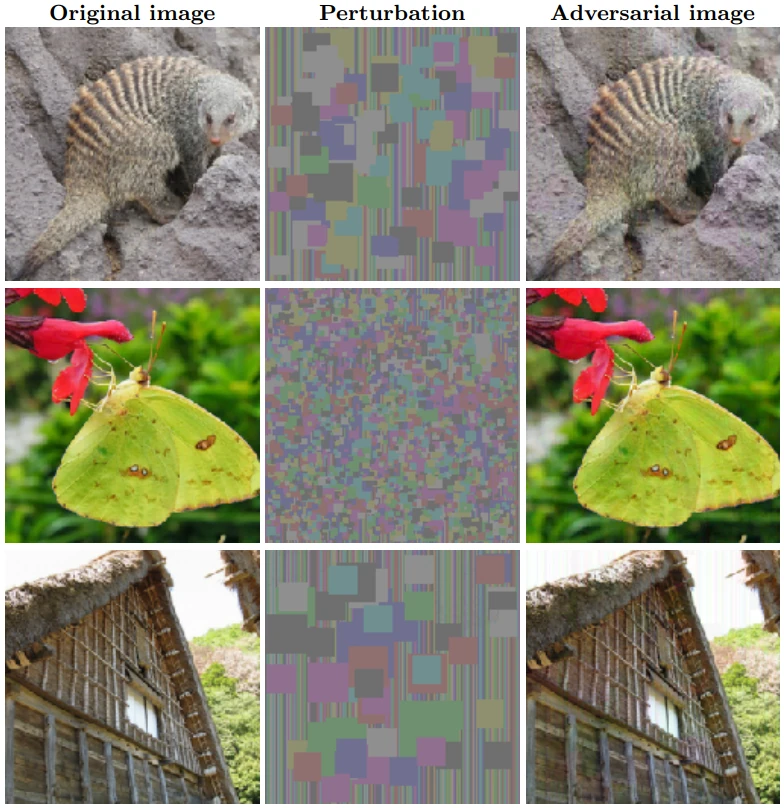

- Square Attack (Andriushchenko et al., ECCV ’20) — A query-efficient black-box attack that perturbs the input with squares instead of individual pixel changes (illustrated in Fig. 2)

AutoAttack involves running these four attacks and reporting the ensembled results. Croce and Hein have undertaken a gargantuan effort in evaluating AutoAttack’s efficiency. They pitted AutoAttack, as well as the separate Auto-PGD variants, against 50 models using 35 various defensive tactics published at top conferences at the time. The results show very convincingly that AutoAttack outputs significantly lower robustness rates of the attacked models, providing a more realistic robustness estimate. As is the case with all serious AI papers, AutoAttack’s code is open-source, facilitating easy usability.

All in all, Croce and Hein have contributed a very strong, yet reasonably fast adversarial attack, setting a strong adversarial defense evaluation standard. If you are looking to truly test your model’s security, look no further.

Subscribe

Enjoying the blog? Subscribe to receive blog updates, post notifications, and monthly post summaries by e-mail.