AI security @ CVPR ’23

CVPR ’23 has brought a large number of new, exciting AI security papers. This post kicks off a series covering that work, starting with an introduction, paper statistics, and the overall topical structure.

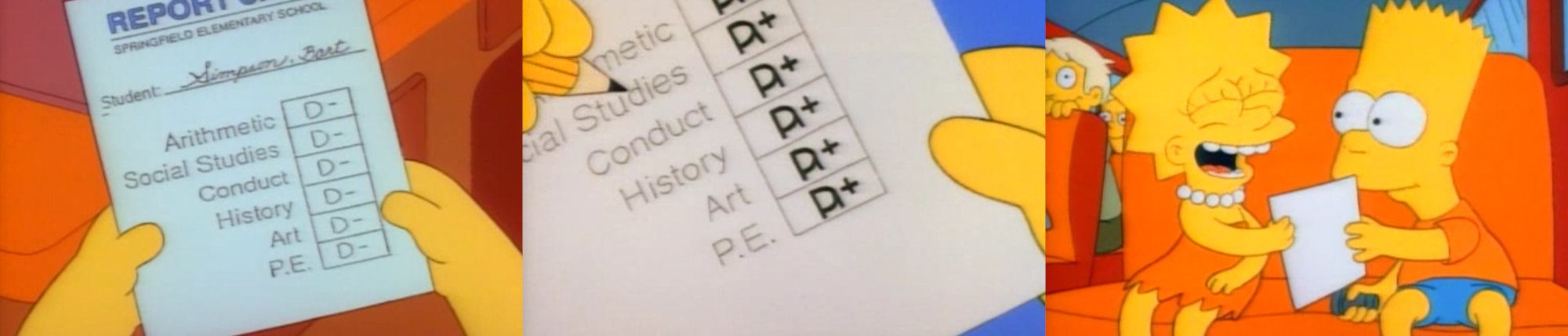

How is it possible to make anything look like something completely different in the eyes of an AI model? This post offers a real-world-inspired intuition for adversarial attacks on AI models.

All AI security papers from CVPR ’22, with paper links, categorized by attack type.

Image manipulation is an attack that alters images to change their meaning, create false narratives, or forge evidence. This post summarizes AI security work on this topic presented at CVPR ’22.

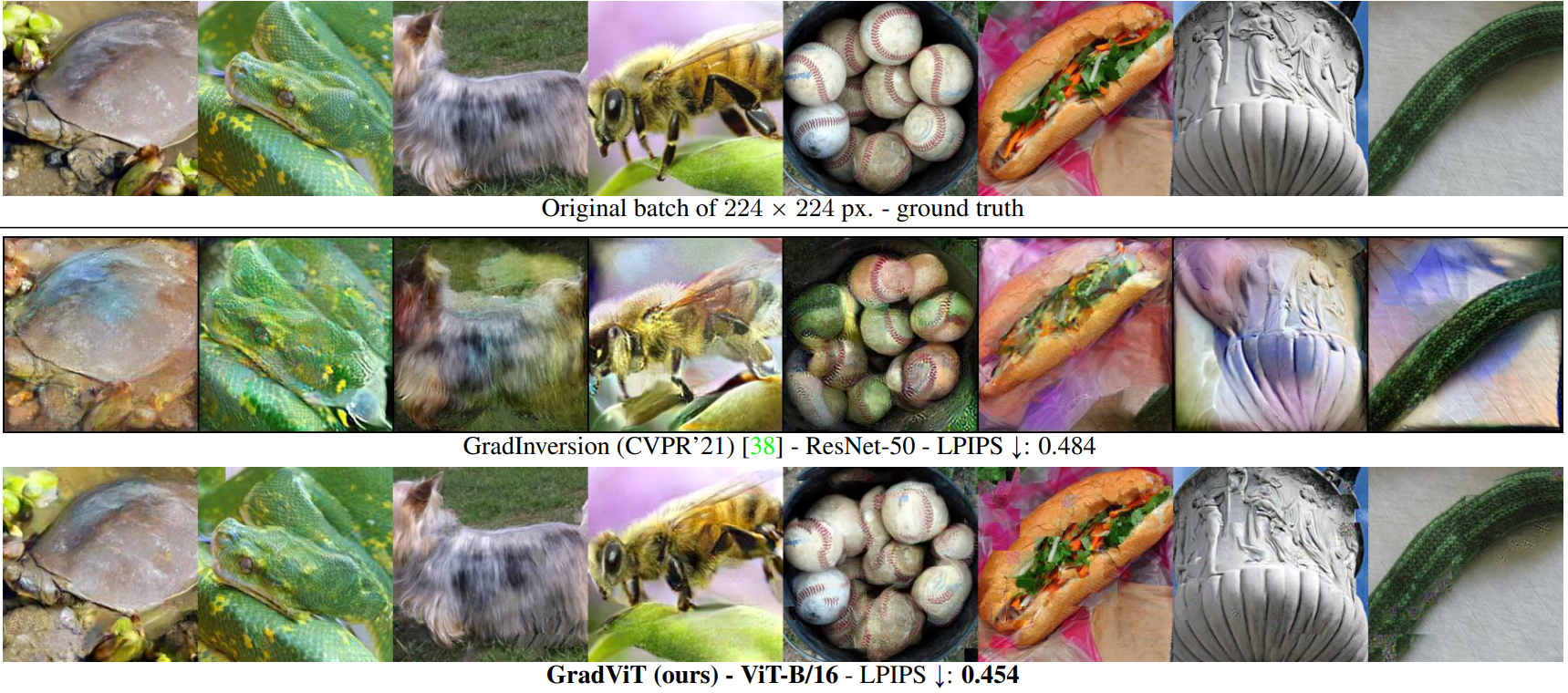

One of the key AI security tasks is protecting data privacy. A model inversion attack can steal training data directly from a trained model, a leak that needs to be prevented. This post covers CVPR ’22 work on model inversion attacks.